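Cilium's Cluster Mesh capability connects multiple Kubernetes clusters into a single mesh: it enables pod-to-pod connectivity across all clusters, lets you define global services that load-balance traffic between clusters, and enforces security policies to restrict access.

Official documentation: https://docs.cilium.io/en/stable/network/clustermesh/clustermesh/#enable-clustermesh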

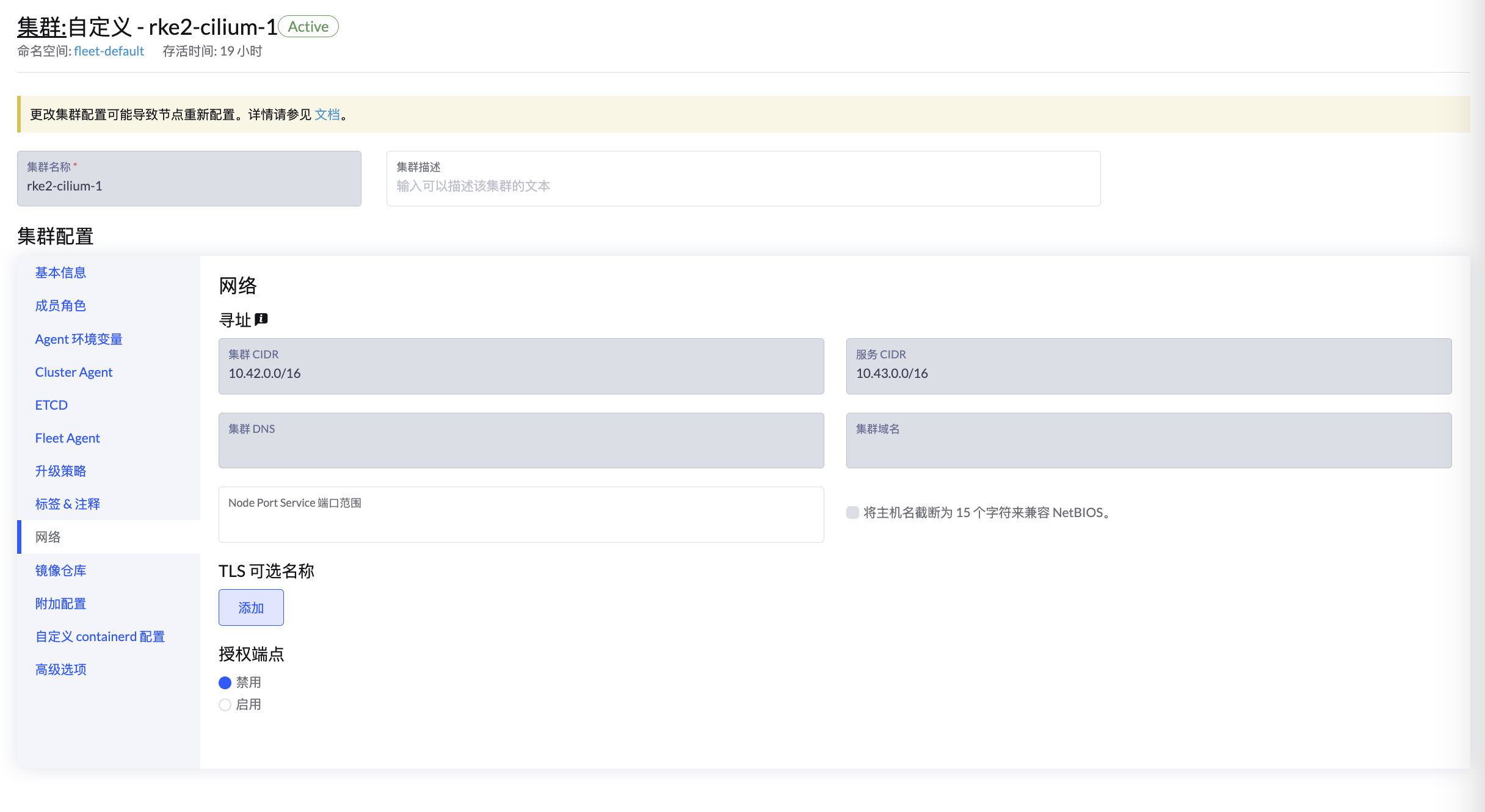

First, create two clusters that use Cilium. The Cluster CIDR and Service CIDR of the two clusters must not conflict:

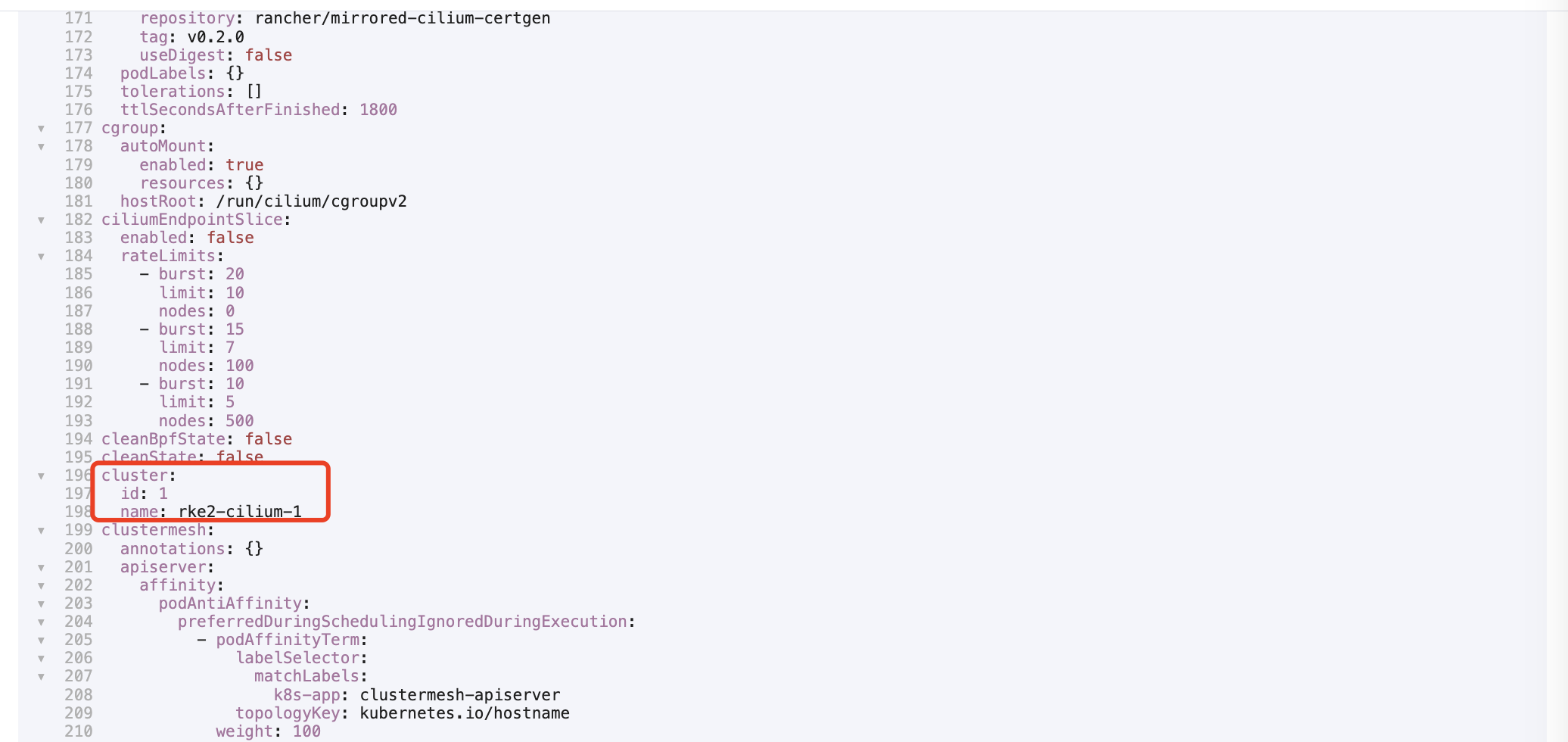
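The non-conflict requirement can be checked before the clusters are created. The following is a minimal sketch, assuming IPv4 CIDRs and bash; the helper names (`ip_to_int`, `cidr_range`, `cidrs_overlap`) and the sample CIDRs are hypothetical and are not taken from the clusters shown here.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print "first last" (as integers) for an IPv4 CIDR such as 10.42.0.0/16.
cidr_range() {
  local ip=${1%/*} prefix=${1#*/}
  local base mask
  base=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  echo "$(( base & mask )) $(( (base & mask) | (~mask & 0xFFFFFFFF) ))"
}

# Return 0 (true) when the two CIDRs share at least one address.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}

# Hypothetical values: cluster 1 pod/service CIDR, then cluster 2 pod/service CIDR.
cidrs=(10.42.0.0/16 10.43.0.0/16 10.44.0.0/16 10.45.0.0/16)
conflicts=0
for (( i = 0; i < ${#cidrs[@]}; i++ )); do
  for (( j = i + 1; j < ${#cidrs[@]}; j++ )); do
    if cidrs_overlap "${cidrs[i]}" "${cidrs[j]}"; then
      echo "conflict: ${cidrs[i]} overlaps ${cidrs[j]}"
      conflicts=$(( conflicts + 1 ))
    fi
  done
done
echo "conflicts found: ${conflicts}"
```

Comparing the first and last address of each range keeps the check independent of prefix length, so a /17 nested inside a /16 is also reported.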

In the additional configuration, set the Cilium Cluster ID and Name for each cluster. These must not conflict either.
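As a rough illustration, the per-cluster settings could be rendered as Helm values like this. `cluster.name` and `cluster.id` are values of the Cilium Helm chart; the helper `render_cluster_values`, the IDs 1 and 2, and the 1 to 255 range check (the chart's default limit, as far as I know) are assumptions made for this sketch.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Cilium Helm values for one cluster.
render_cluster_values() {
  local name=$1 id=$2
  # Assumption: the cluster ID must be an integer in the range 1..255.
  if ! [[ $id =~ ^[1-9][0-9]*$ ]] || (( id > 255 )); then
    echo "invalid cluster id: $id" >&2
    return 1
  fi
  printf 'cluster:\n  name: %s\n  id: %d\n' "$name" "$id"
}

# Each cluster gets a distinct name and a distinct ID.
render_cluster_values rke2-cilium-1 1
render_cluster_values rke2-cilium-2 2
```

The printed fragment for each cluster is what would go into that cluster's additional configuration.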

Once the clusters are created, create a Service in each cluster to expose that cluster's API server externally:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Service
metadata:
  name: apiserver
  namespace: kube-system
spec:
  ports:
  - name: port-6443
    port: 6443
    protocol: TCP
    targetPort: 6443
  selector:
    component: kube-apiserver
  type: NodePort
EOF
```

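On the Control Plane node of each cluster, edit the kubeconfig and add an entry for the peer cluster. The server address can point at the NodePort of the Service created above, for example: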

```yaml
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: xxx
    server: https://172.16.16.140:30645
  name: rke2-cilium-1
- cluster:
    certificate-authority-data: xxx
    server: https://172.16.16.141:32460
  name: rke2-cilium-2
contexts:
- context:
    cluster: rke2-cilium-1
    user: rke2-cilium-1
  name: rke2-cilium-1
- context:
    cluster: rke2-cilium-2
    user: rke2-cilium-2
  name: rke2-cilium-2
current-context: rke2-cilium-1
kind: Config
preferences: {}
users:
- name: rke2-cilium-1
  user:
    client-certificate-data: xxx
    client-key-data: xxx
- name: rke2-cilium-2
  user:
    client-certificate-data: xxx
    client-key-data: xxx
```

Install the Cilium CLI:

```shell
CILIUM_CLI_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/cilium-cli/main/stable.txt)
CLI_ARCH=amd64
if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi
curl -L --fail --remote-name-all https://github.com/cilium/cilium-cli/releases/download/${CILIUM_CLI_VERSION}/cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}
sha256sum --check cilium-linux-${CLI_ARCH}.tar.gz.sha256sum
sudo tar xzvfC cilium-linux-${CLI_ARCH}.tar.gz /usr/local/bin
rm cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}
```

Enable Cluster Mesh on each of the two clusters:

```shell
cilium clustermesh enable --context rke2-cilium-1 --service-type NodePort --helm-release-name rke2-cilium

cilium clustermesh enable --context rke2-cilium-2 --service-type NodePort --helm-release-name rke2-cilium
```

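After the command runs, a `clustermesh-apiserver` Deployment is created in the `kube-system` namespace. If that Deployment is healthy, Cluster Mesh was enabled successfully. You can also check with the following command: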

```text
root@test-0:~# cilium status --context rke2-cilium-1
    /¯¯\
 /¯¯\__/¯¯\    Cilium:             OK
 \__/¯¯\__/    Operator:           OK
 /¯¯\__/¯¯\    Envoy DaemonSet:    disabled (using embedded mode)
 \__/¯¯\__/    Hubble Relay:       disabled
    \__/       ClusterMesh:        OK

DaemonSet              cilium                   Desired: 1, Ready: 1/1, Available: 1/1
Deployment             cilium-operator          Desired: 1, Ready: 1/1, Available: 1/1
Deployment             clustermesh-apiserver    Desired: 1, Ready: 1/1, Available: 1/1
Containers:            cilium                   Running: 1
                       cilium-operator          Running: 1
                       clustermesh-apiserver    Running: 1
Cluster Pods:          15/15 managed by Cilium
Helm chart version:
Image versions         cilium                   harbor.warnerchen.com/rancher/mirrored-cilium-cilium:v1.16.2: 1
                       cilium-operator          harbor.warnerchen.com/rancher/mirrored-cilium-operator-generic:v1.16.2: 1
                       clustermesh-apiserver    harbor.warnerchen.com/rancher/mirrored-cilium-clustermesh-apiserver:v1.16.2: 3
```

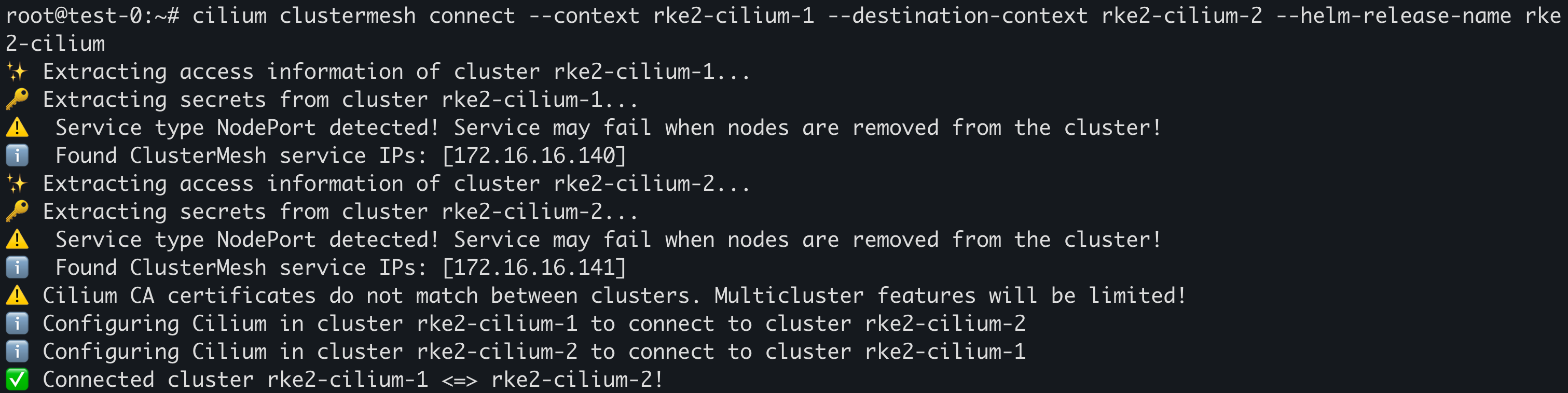

Connect the clusters:

```shell
cilium clustermesh connect --context rke2-cilium-1 --destination-context rke2-cilium-2 --helm-release-name rke2-cilium

cilium clustermesh connect --context rke2-cilium-2 --destination-context rke2-cilium-1 --helm-release-name rke2-cilium
```

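The log output of the command indicates whether the connection was established. You can also verify it with the following command: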

```text
root@test-0:~# cilium clustermesh status rke2-cilium-1
⚠️  Service type NodePort detected! Service may fail when nodes are removed from the cluster!
✅ Service "clustermesh-apiserver" of type "NodePort" found
✅ Cluster access information is available:
  - 172.16.16.140:32379
✅ Deployment clustermesh-apiserver is ready
ℹ️  KVStoreMesh is enabled

✅ All 1 nodes are connected to all clusters [min:1 / avg:1.0 / max:1]
✅ All 1 KVStoreMesh replicas are connected to all clusters [min:1 / avg:1.0 / max:1]

🔌 Cluster Connections:
  - rke2-cilium-2: 1/1 configured, 1/1 connected - KVStoreMesh: 1/1 configured, 1/1 connected

🔀 Global services: [ min:1 / avg:1.0 / max:1 ]
```

If the Cilium Pods fail to connect, restarting Cilium may resolve the problem:

```shell
kubectl -n kube-system rollout restart ds cilium
```

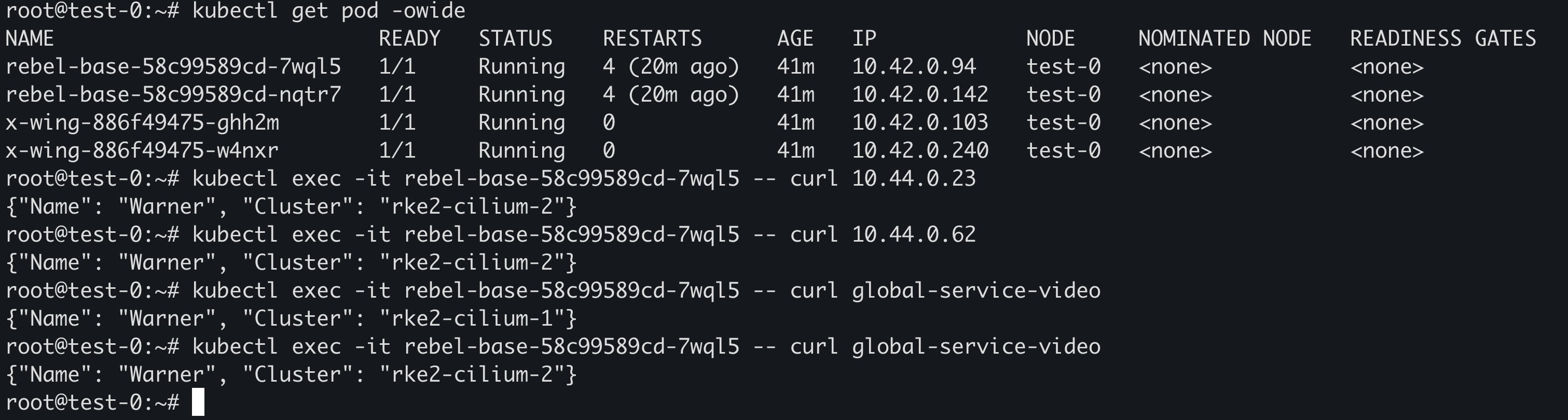

Deploy a demo to test the mesh:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Service
metadata:
  annotations:
    service.cilium.io/global: 'true'
  name: global-service-video
spec:
  ports:
  - name: port-80
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    name: rebel-base
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rebel-base
spec:
  selector:
    matchLabels:
      name: rebel-base
  replicas: 2
  template:
    metadata:
      labels:
        name: rebel-base
    spec:
      containers:
      - name: rebel-base
        image: harbor.warnerchen.com/library/nginx:mainline
        volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html/
        livenessProbe:
          httpGet:
            path: /
            port: 80
          periodSeconds: 1
        readinessProbe:
          httpGet:
            path: /
            port: 80
      volumes:
      - name: html
        configMap:
          name: rebel-base-response
          items:
          - key: message
            path: index.html
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: rebel-base-response
data:
  # Replace the Cluster value here with the name of the cluster being deployed to
  message: "{\"Name\": \"Warner\", \"Cluster\": \"rke2-cilium-1\"}\n"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: x-wing
spec:
  selector:
    matchLabels:
      name: x-wing
  replicas: 2
  template:
    metadata:
      labels:
        name: x-wing
    spec:
      containers:
      - name: x-wing-container
        image: quay.io/cilium/json-mock:v1.3.3@sha256:f26044a2b8085fcaa8146b6b8bb73556134d7ec3d5782c6a04a058c945924ca0
        livenessProbe:
          exec:
            command:
            - curl
            - -sS
            - -o
            - /dev/null
            - localhost
        readinessProbe:
          exec:
            command:
            - curl
            - -sS
            - -o
            - /dev/null
            - localhost
EOF
```

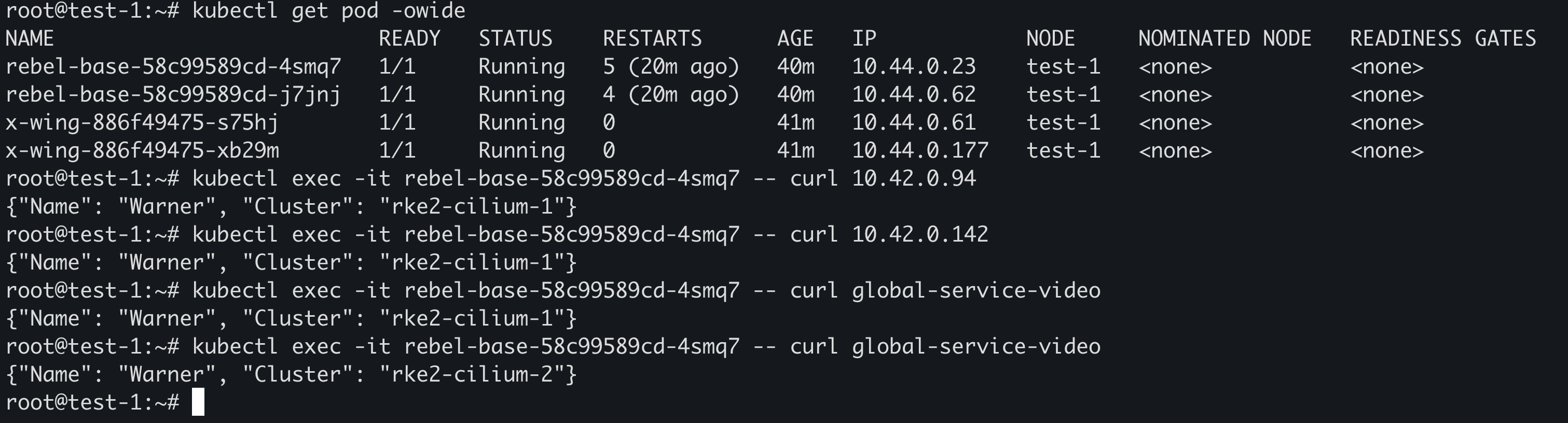
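Once the demo runs in both clusters, one way to see whether requests to the global service are spread across clusters is to count responses per cluster. The sketch below is only an illustration: `tally_clusters` is a hypothetical helper, the sample lines stand in for real responses, and it assumes each response is a single JSON line in the format of the `rebel-base-response` ConfigMap.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read one JSON response per line on stdin and
# print "<cluster>=<count>" for every value of the "Cluster" field.
tally_clusters() {
  sed -n 's/.*"Cluster": *"\([^"]*\)".*/\1/p' | sort | uniq -c | awk '{print $2 "=" $1}'
}

# Stand-in input; against a real cluster the lines would come from, e.g.:
#   for i in $(seq 1 10); do
#     kubectl exec deploy/x-wing -- curl -s global-service-video
#   done | tally_clusters
printf '%s\n' \
  '{"Name": "Warner", "Cluster": "rke2-cilium-1"}' \
  '{"Name": "Warner", "Cluster": "rke2-cilium-2"}' \
  '{"Name": "Warner", "Cluster": "rke2-cilium-1"}' | tally_clusters
```

If both cluster names show up in the tally, backends in both clusters are serving the global service.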

Pods can now communicate directly through either the Pod IP or the Global Service: