Install a single-node RKE2

This RKE2 cluster serves as the `local` cluster and is used to deploy Rancher.

```shell
mkdir -pv /etc/rancher/rke2
cat > /etc/rancher/rke2/config.yaml <<EOF
token: my-shared-secret
tls-san:
  - 172.16.170.200
system-default-registry: registry.cn-hangzhou.aliyuncs.com
debug: true
EOF

curl -sfL https://rancher-mirror.rancher.cn/rke2/install.sh | INSTALL_RKE2_MIRROR=cn sh -
systemctl enable rke2-server --now

# kubeconfig for kubectl/helm
mkdir -pv ~/.kube
ln -s /etc/rancher/rke2/rke2.yaml ~/.kube/config

# Point crictl/nerdctl at RKE2's containerd and put the bundled binaries on PATH
echo "export CONTAINER_RUNTIME_ENDPOINT=\"unix:///run/k3s/containerd/containerd.sock\"" >> ~/.bashrc
echo "export CONTAINERD_ADDRESS=\"/run/k3s/containerd/containerd.sock\"" >> ~/.bashrc
echo "export CONTAINERD_NAMESPACE=\"k8s.io\"" >> ~/.bashrc
# Single quotes so $PATH is expanded when .bashrc runs, not when this line is written
echo 'export PATH=$PATH:/var/lib/rancher/rke2/bin' >> ~/.bashrc
echo "source <(kubectl completion bash)" >> ~/.bashrc

# Helm
curl https://rancher-mirror.rancher.cn/helm/get-helm-3.sh | INSTALL_HELM_MIRROR=cn bash -s -- --version v3.19.0
echo "source <(helm completion bash)" >> ~/.bashrc

# nerdctl
export NERDCTL_VERSION=2.1.3
wget "https://files.m.daocloud.io/github.com/containerd/nerdctl/releases/download/v${NERDCTL_VERSION}/nerdctl-${NERDCTL_VERSION}-linux-amd64.tar.gz"
tar Czvxf /usr/local/bin nerdctl-${NERDCTL_VERSION}-linux-amd64.tar.gz && rm -f nerdctl-${NERDCTL_VERSION}-linux-amd64.tar.gz
```
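Before moving on it is worth confirming that the node actually came up. A minimal sketch of a readiness check, assuming `kubectl` is on `PATH` (open a new shell or `source ~/.bashrc` first); both function names are hypothetical:

```shell
# Hypothetical helper: succeeds only when every node's STATUS column is exactly "Ready".
# "NotReady" and cordoned nodes ("Ready,SchedulingDisabled") count as not ready.
all_nodes_ready() {
  local statuses
  statuses=$(kubectl get nodes --no-headers 2>/dev/null | awk '{print $2}')
  # No nodes listed yet (API server still starting) is treated as not ready
  [ -n "$statuses" ] || return 1
  ! printf '%s\n' "$statuses" | grep -qv '^Ready$'
}

# Hypothetical helper: poll all_nodes_ready every 5 seconds, giving up after $1 seconds (default 300)
wait_nodes_ready() {
  local timeout="${1:-300}" waited=0
  until all_nodes_ready; do
    [ "$waited" -ge "$timeout" ] && return 1
    sleep 5
    waited=$((waited + 5))
  done
}
```

For example `wait_nodes_ready 600 && kubectl get nodes -o wide`. The built-in `kubectl wait --for=condition=Ready node --all --timeout=300s` does much the same, but it errors out instead of waiting while no node object has been registered yet.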

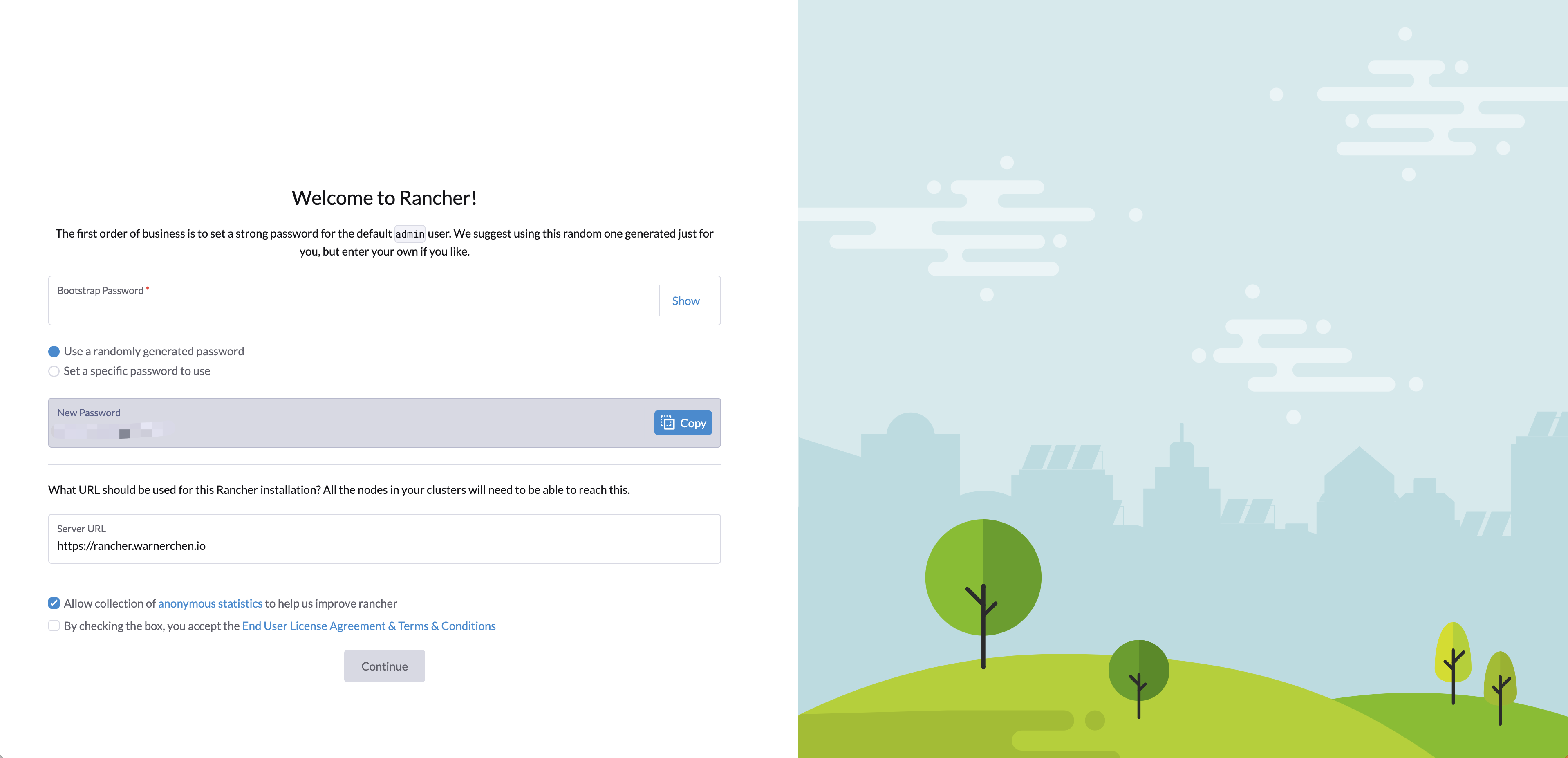

Install Rancher

```shell
helm repo add rancher-stable https://releases.rancher.com/server-charts/stable
helm repo add jetstack https://charts.jetstack.io
helm repo update

helm upgrade --install \
  cert-manager jetstack/cert-manager \
  --namespace cert-manager \
  --create-namespace \
  --version v1.19.2 \
  --set crds.enabled=true

helm upgrade --install rancher rancher-stable/rancher \
  --namespace cattle-system \
  --create-namespace \
  --set hostname=xxx.com \
  --set replicas=1 \
  --set bootstrapPassword=xxx \
  --set rancherImage=registry.cn-hangzhou.aliyuncs.com/rancher/rancher \
  --set systemDefaultRegistry=registry.cn-hangzhou.aliyuncs.com
```

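RKE2 installs the NGINX Ingress Controller by default, listening on ports 80/443 of the node. When Rancher is installed with `hostname` set, the chart creates an Ingress for that hostname, so Rancher can be accessed through this Ingress.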
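Since no DNS record exists for `xxx.com` in this setup, the machine you browse from needs a static entry pointing at the node (`172.16.170.200`). A minimal idempotent sketch; the function name is hypothetical and it edits `/etc/hosts` unless another file is given:

```shell
# Hypothetical helper: append "IP HOST" to a hosts file unless HOST is already mapped there.
# Usage: ensure_hosts_entry 172.16.170.200 xxx.com [/etc/hosts]
ensure_hosts_entry() {
  local ip="$1" host="$2" file="${3:-/etc/hosts}"
  # Look for HOST as a whole field (after the IP) on any non-comment line
  if awk -v h="$host" '!/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 } END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$host" >> "$file"
}
```

Run it as root on the client; afterwards `curl -k https://xxx.com/ping` should answer `pong` if Rancher is reachable.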

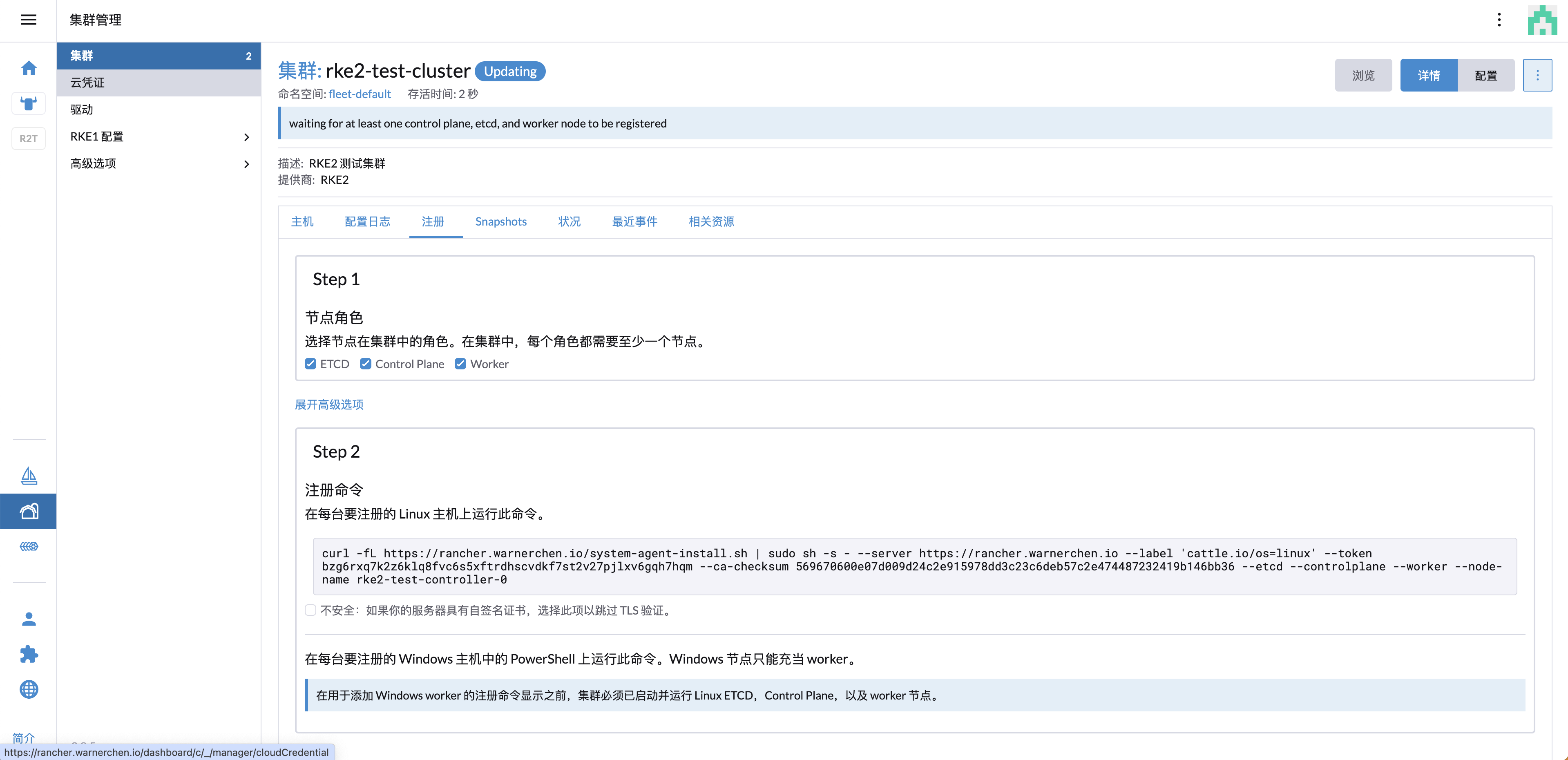

Create a cluster

After a cluster is created in the UI, Rancher shows a registration command; run it on each node to register the node:

```console
root@rke2-test-controller-0:~# curl --insecure -fL https://xxx.com/system-agent-install.sh | sudo sh -s - --server https://xxx.com --label 'cattle.io/os=linux' --token xxx --ca-checksum xxx --etcd --controlplane --worker --node-name rke2-test-controller-0
[INFO] Label: cattle.io/os=linux
[INFO] Role requested: etcd
[INFO] Role requested: controlplane
[INFO] Role requested: worker
[INFO] Using default agent configuration directory /etc/rancher/agent
[INFO] Using default agent var directory /var/lib/rancher/agent
[INFO] Determined CA is necessary to connect to Rancher
[INFO] Successfully downloaded CA certificate
[INFO] Value from https://xxx.com/cacerts is an x509 certificate
[INFO] Successfully tested Rancher connection
[INFO] Downloading rancher-system-agent binary from https://xxx.com/assets/rancher-system-agent-amd64
[INFO] Successfully downloaded the rancher-system-agent binary.
[INFO] Downloading rancher-system-agent-uninstall.sh script from https://xxx.com/assets/system-agent-uninstall.sh
[INFO] Successfully downloaded the rancher-system-agent-uninstall.sh script.
[INFO] Generating Cattle ID
[INFO] Successfully downloaded Rancher connection information
[INFO] systemd: Creating service file
[INFO] Creating environment file /etc/systemd/system/rancher-system-agent.env
[INFO] Enabling rancher-system-agent.service
Created symlink /etc/systemd/system/multi-user.target.wants/rancher-system-agent.service → /etc/systemd/system/rancher-system-agent.service.
[INFO] Starting/restarting rancher-system-agent.service
root@rke2-test-controller-0:~#
```

After registration, `cattle-cluster-agent` keeps crashing and restarting:

```console
root@rke2-test-controller-0:~# kubectl -n cattle-system get pod
NAME                                    READY   STATUS             RESTARTS      AGE
cattle-cluster-agent-767b67b66f-bcl2s   0/1     CrashLoopBackOff   5 (79s ago)   10m
root@rke2-test-controller-0:~# kubectl -n cattle-system logs cattle-cluster-agent-767b67b66f-bcl2s -p
...
ERROR: https://xxx.com/ping is not accessible (Could not resolve host: xxx.com)
```

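This happens because no DNS server resolves the domain. As a temporary workaround, map it in CoreDNS with the `hosts` plugin (the `hosts` block at the end of the Corefile), then restart: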

```
.:53 {
    errors
    health {
        lameduck 5s
    }
    ready
    kubernetes cluster.local cluster.local in-addr.arpa ip6.arpa {
        pods insecure
        fallthrough in-addr.arpa ip6.arpa
        ttl 30
    }
    prometheus 0.0.0.0:9153
    forward . /etc/resolv.conf
    cache 30
    loop
    reload
    loadbalance
    hosts {
        172.16.170.200 xxx.com
        fallthrough
    }
}
```
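In RKE2 this Corefile lives in the ConfigMap of the bundled CoreDNS chart; in a default install that should be `rke2-coredns-rke2-coredns` in `kube-system` (check with `kubectl -n kube-system get cm`), edited e.g. with `kubectl -n kube-system edit configmap rke2-coredns-rke2-coredns`. The `reload` plugin picks up the change after a short delay, and since the ConfigMap is managed by a Helm chart, an upgrade may overwrite manual edits, which is why this is only temporary. A sketch of the restart step; the helper name is hypothetical and the Deployment names assume a default install:

```shell
# Hypothetical helper: restart CoreDNS (optional, reload also applies the change),
# wait for it, then restart the crash-looping cattle-cluster-agent so it resolves xxx.com again.
restart_after_dns_fix() {
  kubectl -n kube-system rollout restart deployment/rke2-coredns-rke2-coredns &&
    kubectl -n kube-system rollout status deployment/rke2-coredns-rke2-coredns --timeout=120s &&
    kubectl -n cattle-system rollout restart deployment/cattle-cluster-agent
}
```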

The cluster then becomes Ready.

Monitoring

Install the Monitoring Helm chart from the UI. It deploys the basic components (e.g. Prometheus, Alertmanager…).

WebHook configuration

Alerts can be delivered through many kinds of receivers. A webhook receiver is configured with an `AlertmanagerConfig` CR:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: monitoring.coreos.com/v1alpha1
kind: AlertmanagerConfig
metadata:
  name: test-webhook
  namespace: default
spec:
  receivers:
    - name: test-webhook
      webhookConfigs:
        - httpConfig:
            tlsConfig: {}
          sendResolved: false
          url: https://webhook.site/xxx
  route:
    # The top-level route must name a receiver
    receiver: test-webhook
    groupBy: []
    groupInterval: 5m
    groupWait: 30s
    matchers: []
    repeatInterval: 4h
EOF
```
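To exercise the webhook without waiting for a real alert, a test alert can be pushed into Alertmanager's v2 API (`POST /api/v2/alerts`). One hedged caveat: the Prometheus Operator normally adds a `namespace` matcher to routes generated from an `AlertmanagerConfig`, so this route should only see alerts labelled `namespace="default"`; the test alert sets that label. The Service name `rancher-monitoring-alertmanager` in `cattle-monitoring-system` is an assumption based on the chart's defaults, and the helper name is hypothetical:

```shell
# Hypothetical helper: POST one firing test alert to Alertmanager's v2 API.
# $1 = Alertmanager base URL, e.g. after
#   kubectl -n cattle-monitoring-system port-forward svc/rancher-monitoring-alertmanager 9093:9093
send_test_alert() {
  local am_url="${1:-http://127.0.0.1:9093}"
  # namespace="default" so the route generated from default/test-webhook matches
  local payload='[{"labels":{"alertname":"WebhookTest","namespace":"default","severity":"warning"},"annotations":{"summary":"test alert sent with curl"}}]'
  curl -sS -X POST -H 'Content-Type: application/json' \
    -d "$payload" "${am_url%/}/api/v2/alerts"
}
```

With `groupWait: 30s`, the request should show up on `webhook.site` roughly half a minute later.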

Logging

Install the Logging Helm chart from the UI. It is built mainly on the Logging Operator, which configures the log pipeline.

The Logging Operator deploys a Fluent Bit DaemonSet that collects logs and forwards them to Fluentd, which then sends them to the configured outputs.

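The main CRs are:

- Flow: a namespaced resource that uses filters and selectors to route log messages to the matching Output or ClusterOutput
- ClusterFlow: routes log messages at the cluster level, i.e. from any namespace, to ClusterOutputs
- Output: a namespaced log destination; only Flows in the same namespace can reference it
- ClusterOutput: a cluster-wide log destination that both Flow and ClusterFlow can reference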
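For the cluster-scoped pair, both resources live in the Logging Operator's control namespace, which for Rancher's chart is `cattle-logging-system`. The sketch below prints a `ClusterOutput` and a `ClusterFlow` that routes every log from one namespace to it, reusing the ES endpoint deployed later in this post; the function name, resource names and index name are hypothetical, and it assumes the ECK Secret `logging-es-elastic-user` exists in that namespace:

```shell
# Hypothetical renderer: ClusterOutput + ClusterFlow sending all logs from namespace $1
# to Elasticsearch. Pipe the result into `kubectl apply -f -`.
render_cluster_logging() {
  local src_ns="${1:-default}"
  cat <<EOF
apiVersion: logging.banzaicloud.io/v1beta1
kind: ClusterOutput
metadata:
  name: cluster-output-to-es
  namespace: cattle-logging-system
spec:
  elasticsearch:
    host: logging-es-http.cattle-logging-system.svc.cluster.local
    port: 9200
    scheme: https
    ssl_verify: false
    user: elastic
    password:
      valueFrom:
        secretKeyRef:
          name: logging-es-elastic-user
          key: elastic
    index_name: cluster-${src_ns}
---
apiVersion: logging.banzaicloud.io/v1beta1
kind: ClusterFlow
metadata:
  name: cluster-flow-${src_ns}
  namespace: cattle-logging-system
spec:
  match:
    - select:
        namespaces:
          - ${src_ns}
  globalOutputRefs:
    - cluster-output-to-es
EOF
}
```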

Deploy ES and Kibana

Use the ECK Operator to deploy Elasticsearch and Kibana; logs can then be shipped to ES by configuring an Output.

```shell
kubectl create -f https://download.elastic.co/downloads/eck/2.14.0/crds.yaml
kubectl apply -f https://download.elastic.co/downloads/eck/2.14.0/operator.yaml
```
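Before creating the ES and Kibana resources it helps to wait for the operator. With the manifests above, ECK runs as the `elastic-operator` StatefulSet in `elastic-system`; the helper name is hypothetical:

```shell
# Hypothetical helper: wait until the ECK operator is rolled out and the
# Elasticsearch/Kibana CRDs are registered with the API server.
wait_eck_operator() {
  kubectl -n elastic-system rollout status statefulset/elastic-operator --timeout=180s &&
    kubectl wait --for=condition=Established --timeout=60s \
      crd/elasticsearches.elasticsearch.k8s.elastic.co crd/kibanas.kibana.k8s.elastic.co
}
```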

Install ES and Kibana, using local storage for now:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: elasticsearch.k8s.elastic.co/v1
kind: Elasticsearch
metadata:
  name: logging
  namespace: cattle-logging-system
spec:
  version: 7.15.2
  nodeSets:
    - name: logging
      count: 1
      config:
        node.store.allow_mmap: false
EOF

cat <<EOF | kubectl apply -f -
apiVersion: kibana.k8s.elastic.co/v1
kind: Kibana
metadata:
  name: logging
  namespace: cattle-logging-system
spec:
  version: 7.15.2
  count: 1
  elasticsearchRef:
    name: logging
EOF

cat <<EOF | kubectl apply -f -
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/backend-protocol: HTTPS
  name: logging-kb
  namespace: cattle-logging-system
spec:
  ingressClassName: nginx
  rules:
    - host: kibana.warnerchen.io
      http:
        paths:
          - backend:
              service:
                name: logging-kb-http
                port:
                  number: 5601
            path: /
            pathType: Prefix
EOF
```
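ECK stores the password of the built-in `elastic` user in the Secret `logging-es-elastic-user` (key `elastic`) in `cattle-logging-system`. The Output created next reads a Secret of the same name from `default`, presumably because an Output can only reference Secrets in its own namespace. A sketch that copies the password over instead of pasting it by hand; the helper name is hypothetical:

```shell
# Hypothetical helper: copy the ECK-generated elastic password into another namespace
# as Secret logging-es-elastic-user (key "elastic"), creating or updating it.
copy_es_password() {
  local src_ns="${1:-cattle-logging-system}" dst_ns="${2:-default}" pw
  pw=$(kubectl -n "$src_ns" get secret logging-es-elastic-user \
         -o jsonpath='{.data.elastic}' | base64 -d) || return 1
  [ -n "$pw" ] || return 1
  # Render client-side and apply, so re-running the helper updates instead of failing
  kubectl -n "$dst_ns" create secret generic logging-es-elastic-user \
    --from-literal=elastic="$pw" --dry-run=client -o yaml | kubectl apply -f -
}
```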

Create the Flow and Output

The Output has to be created first:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: v1
data:
  elastic: xxx  # base64-encoded password of the elastic user
kind: Secret
metadata:
  name: logging-es-elastic-user
  namespace: default
type: Opaque
---
apiVersion: logging.banzaicloud.io/v1beta1
kind: Output
metadata:
  name: output-to-es
  namespace: default
spec:
  elasticsearch:
    host: logging-es-http.cattle-logging-system.svc.cluster.local
    index_name: ns-default
    password:
      valueFrom:
        secretKeyRef:
          key: elastic
          name: logging-es-elastic-user
    port: 9200
    scheme: https
    ssl_verify: false
    ssl_version: TLSv1_2
    suppress_type_name: false
    user: elastic
EOF
```

Create a Flow that collects logs from Pods labelled `app=nginx`:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: logging.banzaicloud.io/v1beta1
kind: Flow
metadata:
  name: flow-for-default
  namespace: default
spec:
  localOutputRefs:
    - output-to-es
  match:
    - select:
        labels:
          app: nginx
EOF
```

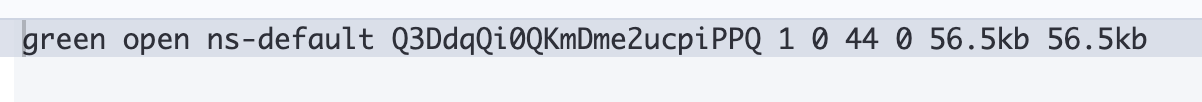

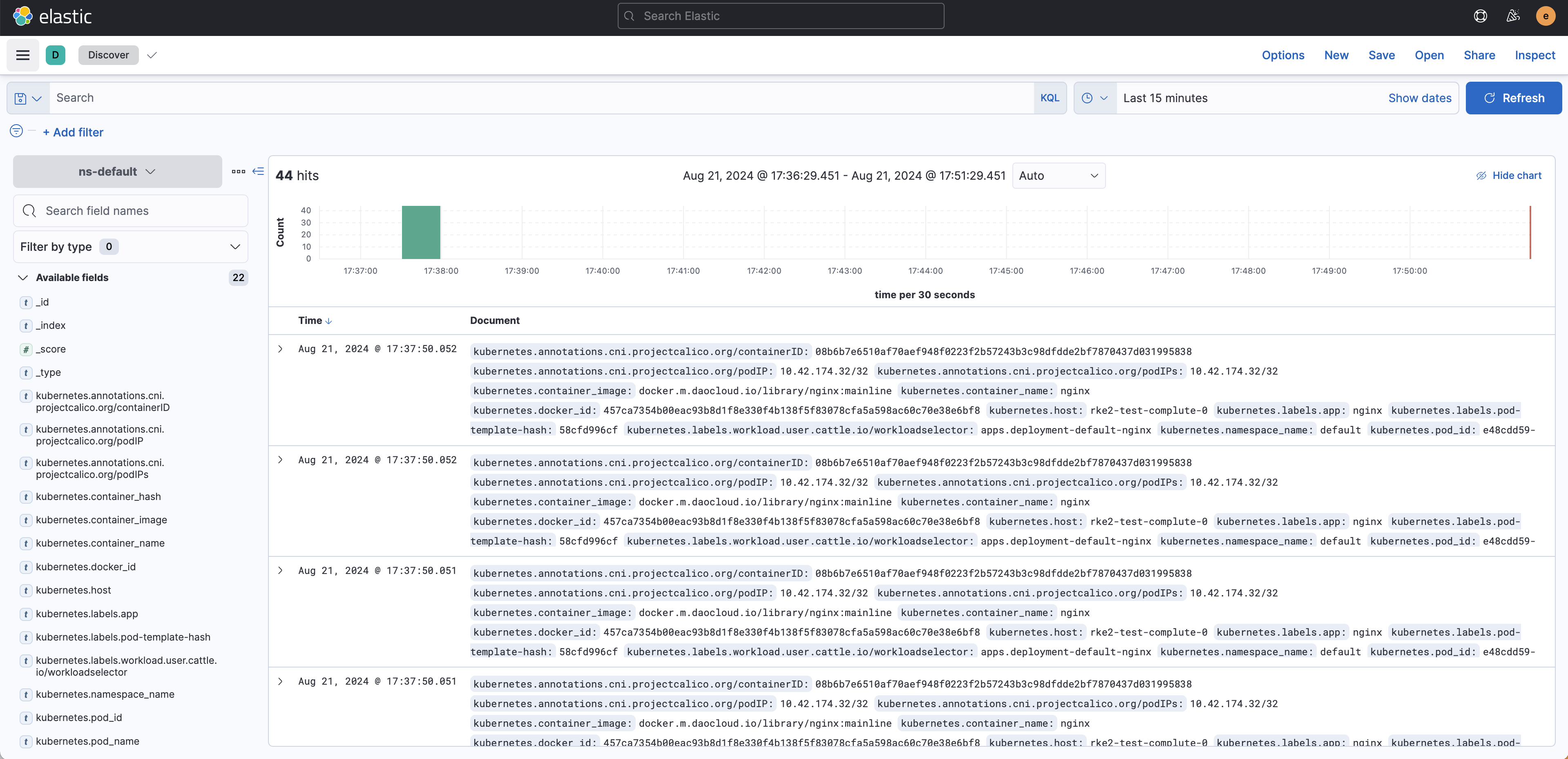

Check that the corresponding index exists.
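The index can also be checked from the command line with the `_cat/indices` API. This sketch runs `curl` inside the ES Pod, whose name `logging-es-logging-0` follows ECK's `<cluster>-es-<nodeSet>-<ordinal>` naming; it assumes `curl` is available in the Elasticsearch image, and the helper name is hypothetical. With `index_name: ns-default`, the index should be called `ns-default`:

```shell
# Hypothetical helper: list indices matching $1 (default ns-default) via _cat/indices,
# authenticating as the elastic user with the password ECK generated.
show_es_indices() {
  local pattern="${1:-ns-default}" pw
  pw=$(kubectl -n cattle-logging-system get secret logging-es-elastic-user \
         -o jsonpath='{.data.elastic}' | base64 -d) || return 1
  # -k because ECK serves a self-signed certificate by default
  kubectl -n cattle-logging-system exec logging-es-logging-0 -- \
    curl -sk -u "elastic:${pw}" "https://localhost:9200/_cat/indices/${pattern}?v"
}
```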

Create an index pattern to view the logs.

NeuVector

Install the NeuVector Helm chart from the UI.

LongHorn

Before installing LongHorn, install its dependencies on every node:

```shell
apt update
apt -y install open-iscsi nfs-common
systemctl enable iscsid --now
```
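A quick per-node preflight check for these dependencies could look like the sketch below; the function name is hypothetical, and it only checks the two binaries the packages provide plus the `iscsid` service:

```shell
# Hypothetical preflight check for the LongHorn node dependencies installed above.
# Prints what is missing and returns non-zero if anything is.
check_longhorn_deps() {
  local missing=0 bin
  # open-iscsi provides iscsiadm; nfs-common provides mount.nfs (RWX volumes, NFS backup targets)
  for bin in iscsiadm mount.nfs; do
    if ! command -v "$bin" >/dev/null 2>&1; then
      echo "missing: $bin"
      missing=1
    fi
  done
  if ! systemctl is-active --quiet iscsid; then
    echo "iscsid is not active"
    missing=1
  fi
  return "$missing"
}
```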

Then install LongHorn from the UI.

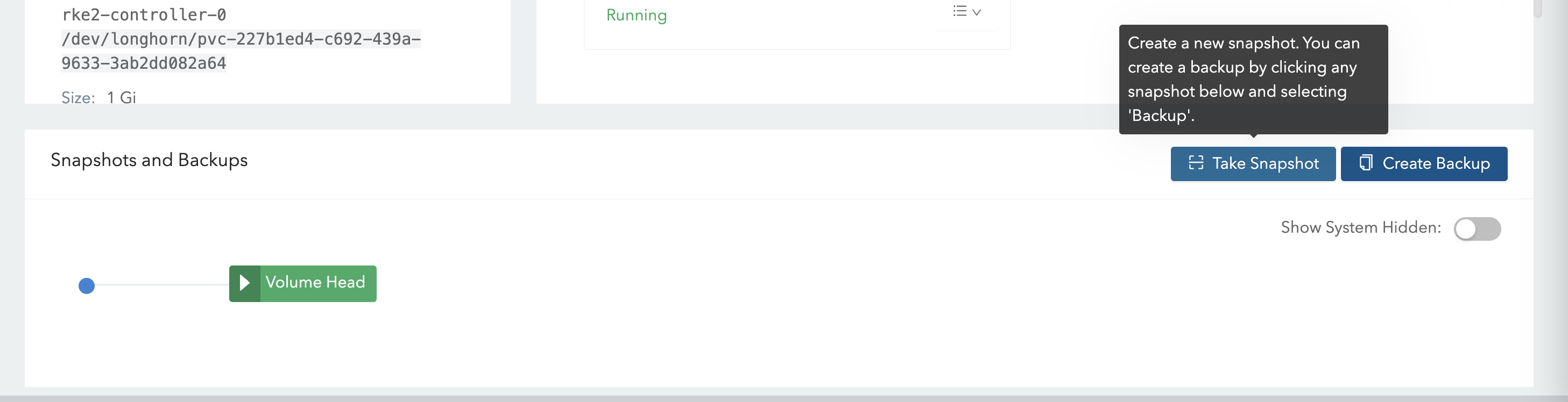

Volume snapshot and restore

LongHorn supports snapshots, so a volume can be snapshotted and restored directly.

Create a snapshot in the UI.

Then delete the data file:

```shell
kubectl exec -it nginx-7f6d5dcf8c-tvxcw -- rm -rf /data/test.txt
```

Stop the service:

```shell
kubectl scale deployment nginx --replicas=0
```

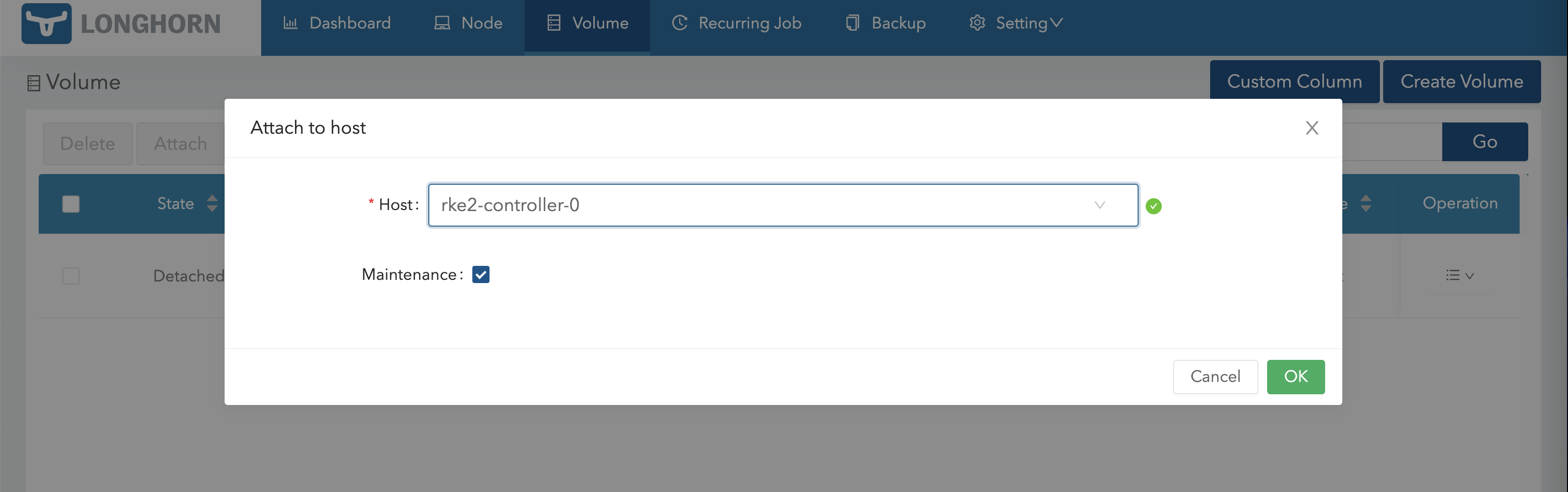

Re-attach the volume in maintenance mode:

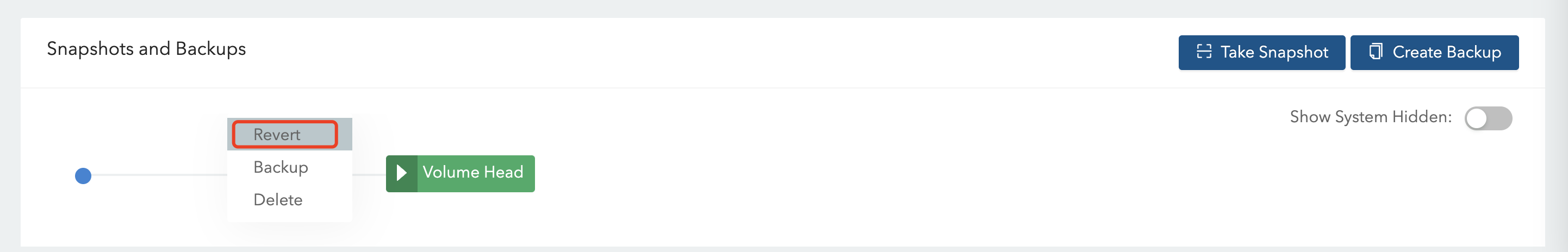

Once it is attached, open the volume and select the snapshot to revert to:

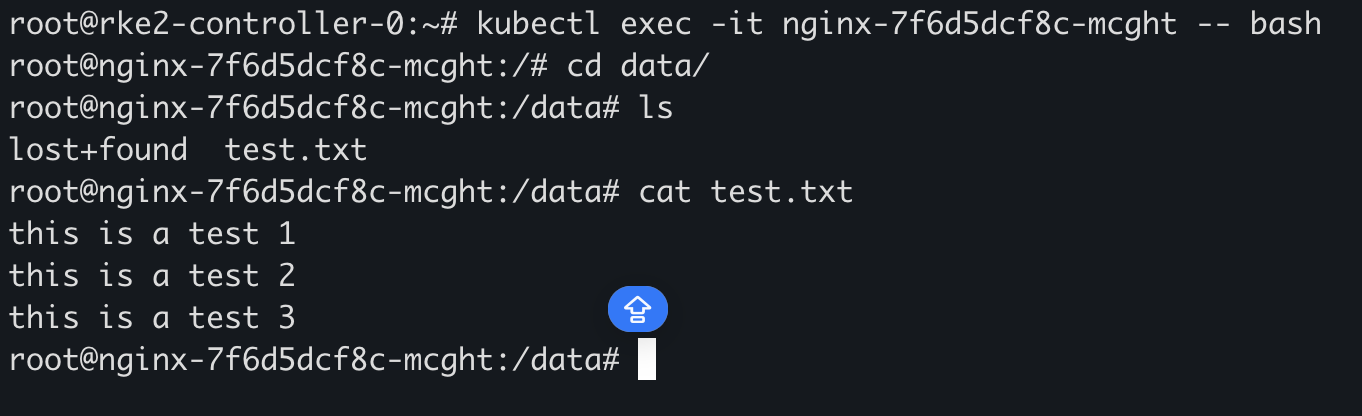

After the revert, detach the volume and start the service again; the data is back.
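Part of this can also be scripted. To my knowledge LongHorn v1.5 and later ship a `Snapshot` custom resource (`longhorn.io/v1beta2`) whose `spec.volume` names the LongHorn volume, which for a dynamically provisioned PVC is the PV name; confirm it exists with `kubectl get crd snapshots.longhorn.io` before relying on it. A hedged sketch with a hypothetical helper name; the revert itself is still done in the UI as above:

```shell
# Hypothetical renderer for a LongHorn Snapshot CR of the volume behind PVC $1 (namespace default).
# Assumes LongHorn >= v1.5 and that the LongHorn volume name equals the bound PV name.
render_longhorn_snapshot() {
  local pvc="$1" snap="$2" vol
  vol=$(kubectl -n default get pvc "$pvc" -o jsonpath='{.spec.volumeName}') || return 1
  [ -n "$vol" ] || return 1
  cat <<EOF
apiVersion: longhorn.io/v1beta2
kind: Snapshot
metadata:
  name: ${snap}
  namespace: longhorn-system
spec:
  volume: ${vol}
  createSnapshot: true
EOF
}
```

For example `render_longhorn_snapshot <pvc-name> before-delete | kubectl apply -f -` before deleting the file, and `kubectl scale deployment nginx --replicas=1` to start the service again after the revert and detach.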

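Volume backup and disaster recovery

This test uses LongHorn to back up and restore data across clusters. Backups can be stored outside the cluster on S3 or NFS.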

Deploy MinIO using the MinIO Operator:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: v1
data:
  accesskey: bWluaW8=
  secretkey: VGpCcFkwVTNZVGcyU3c9PQ==
kind: Secret
metadata:
  name: backup-minio-secret
  namespace: default
type: Opaque
---
apiVersion: v1
data:
  config.env: ZXhwb3J0IE1JTklPX0JST1dTRVI9Im9uIgpleHBvcnQgTUlOSU9fUk9PVF9VU0VSPSJtaW5pbyIKZXhwb3J0IE1JTklPX1JPT1RfUEFTU1dPUkQ9IlRqQnBZMFUzWVRnMlN3PT0iCg==
kind: Secret
metadata:
  name: backup-minio-env-configuration
  namespace: default
type: Opaque
---
apiVersion: minio.min.io/v2
kind: Tenant
metadata:
  name: backup-minio
  namespace: default
spec:
  buckets:
    - name: longhorn
  configuration:
    name: backup-minio-env-configuration
  # credsSecret:
  #   name: backup-minio-secret
  env:
    - name: MINIO_PROMETHEUS_AUTH_TYPE
      value: public
    - name: MINIO_SERVER_URL
      value: http://minio-hl.warnerchen.io
  image: quay.m.daocloud.io/minio/minio:RELEASE.2023-10-07T15-07-38Z
  initContainers:
    - command:
        - sh
        - -c
        - chown -R 1000:1000 /export/* || true
      image: quay.m.daocloud.io/minio/minio:RELEASE.2023-10-07T15-07-38Z
      name: change-permission
      securityContext:
        capabilities:
          add:
            - CHOWN
      volumeMounts:
        - mountPath: /export
          name: "0"
  pools:
    - name: pool-0
      resources:
        limits:
          cpu: 500m
          memory: 500Mi
        requests:
          cpu: 50m
          memory: 100Mi
      servers: 1
      volumeClaimTemplate:
        metadata: {}
        spec:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 10Gi
      volumesPerServer: 1
  requestAutoCert: false
  serviceMetadata:
    minioServiceLabels:
      mcamel/exporter-type: minio
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: minio
  namespace: default
spec:
  rules:
    - host: minio.warnerchen.io
      http:
        paths:
          - backend:
              service:
                name: minio
                port:
                  number: 443
            path: /
            pathType: Prefix
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: minio-hl
  namespace: default
spec:
  rules:
    - host: minio-hl.warnerchen.io
      http:
        paths:
          - backend:
              service:
                name: backup-minio-hl
                port:
                  number: 9000
            path: /
            pathType: Prefix
EOF
```
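The `config.env` value above is simply a base64-encoded shell snippet that exports `MINIO_BROWSER`, `MINIO_ROOT_USER` and `MINIO_ROOT_PASSWORD` (decoding it shows this). Rather than reusing the sample credentials, a value can be generated like this; the function name is hypothetical:

```shell
# Hypothetical helper: print the base64 value for the config.env key of the Tenant's
# env-configuration Secret, built from a MinIO root user and password.
minio_config_env_b64() {
  local user="$1" password="$2"
  printf 'export MINIO_BROWSER="on"\nexport MINIO_ROOT_USER="%s"\nexport MINIO_ROOT_PASSWORD="%s"\n' \
    "$user" "$password" | base64 | tr -d '\n'
}
```

The access key and secret key that LongHorn uses later are then this root user and password.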

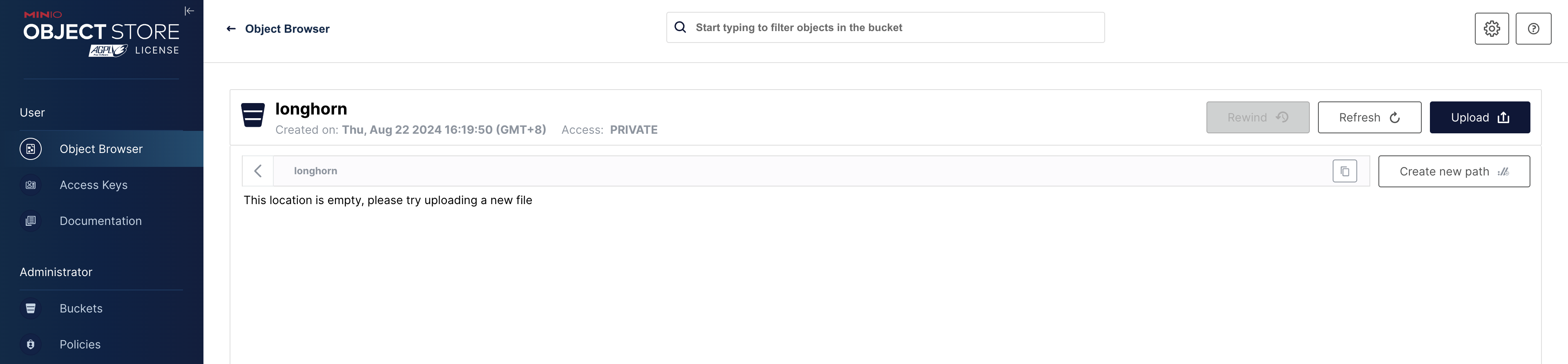

Prepare a bucket:

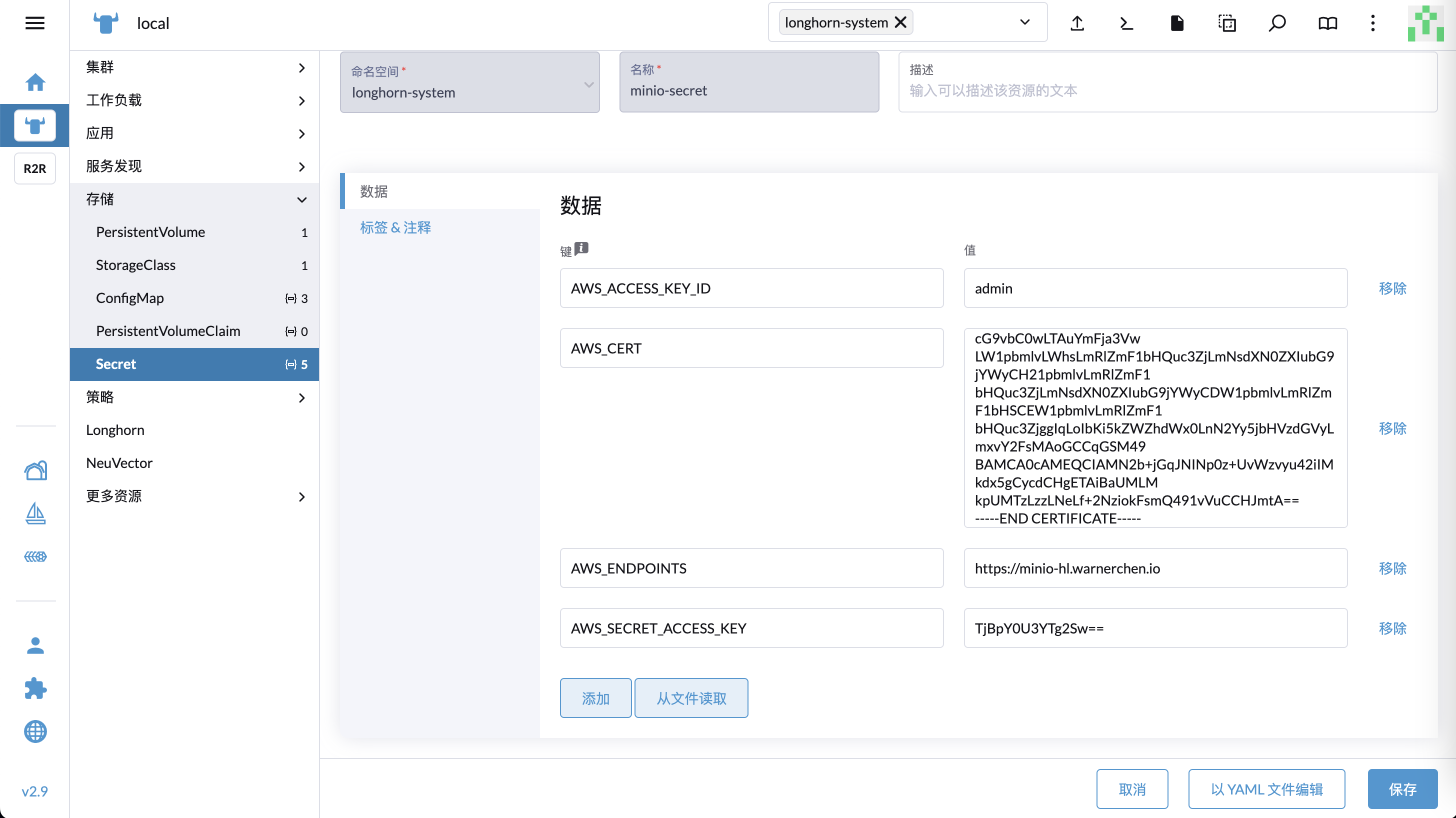

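Create a Secret in `longhorn-system` on both clusters with the following keys:

- `AWS_ACCESS_KEY_ID`: access key
- `AWS_SECRET_ACCESS_KEY`: secret key
- `AWS_ENDPOINTS`: S3 endpoint URL
- `AWS_CERT`: CA certificate, needed only if the endpoint uses a self-signed certificate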
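The same Secret can be created from the command line on each cluster. A sketch with a hypothetical helper and Secret name; the endpoint would be the `minio-hl.warnerchen.io` Ingress above. For an S3-compatible store, LongHorn's Backup Target URL takes the form `s3://<bucket>@<region>/`, e.g. `s3://longhorn@us-east-1/` for the `longhorn` bucket; MinIO does not care about the region, but the field has to be present:

```shell
# Hypothetical helper: create/update the credential Secret for a LongHorn S3 backup target.
# $1 access key, $2 secret key, $3 endpoint URL, $4 optional CA file (self-signed certs only)
create_longhorn_s3_secret() {
  local ak="$1" sk="$2" endpoint="$3" ca="$4"
  # ${ca:+...} adds the AWS_CERT key only when a CA file was passed
  kubectl -n longhorn-system create secret generic minio-backup-secret \
    --from-literal=AWS_ACCESS_KEY_ID="$ak" \
    --from-literal=AWS_SECRET_ACCESS_KEY="$sk" \
    --from-literal=AWS_ENDPOINTS="$endpoint" \
    ${ca:+"--from-file=AWS_CERT=$ca"} \
    --dry-run=client -o yaml | kubectl apply -f -
}
```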

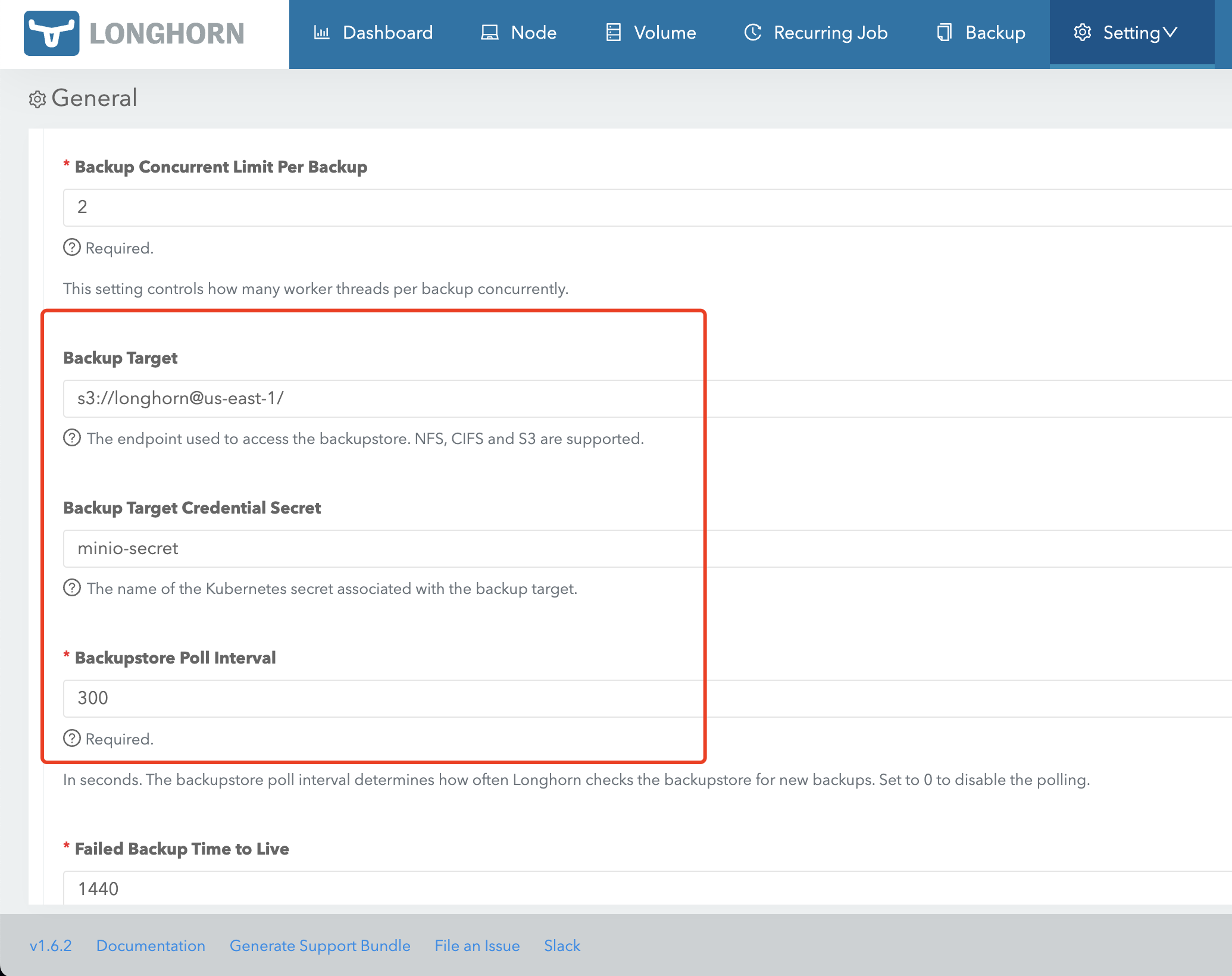

After creating the Secret, configure the Backup Target in the LongHorn UI:

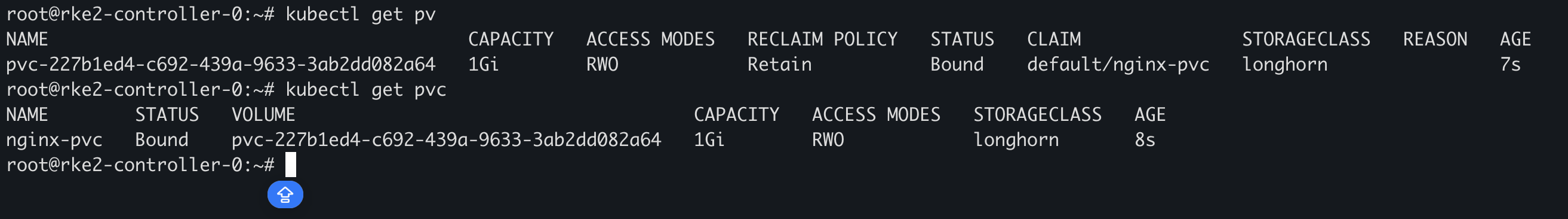

On either cluster, create a PVC:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: longhorn
EOF
```

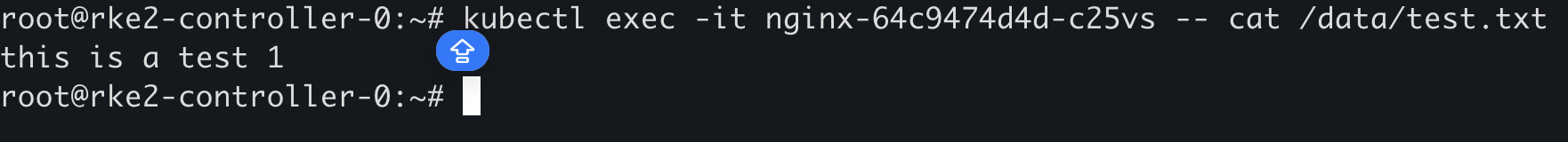

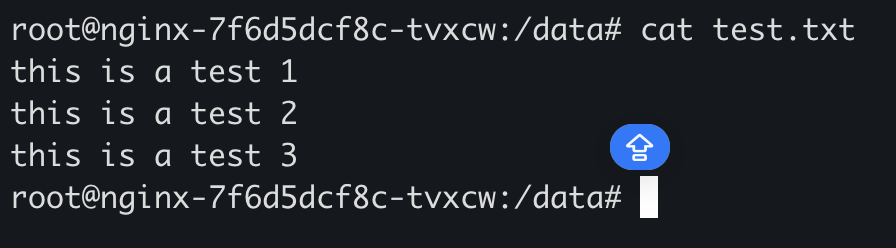

Mount it in Nginx and write some arbitrary data:
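The post does not show the Nginx manifest. A minimal sketch of a Deployment that mounts `nginx-pvc` at `/data` (the path used in the snapshot test) might look like this; the Deployment name, image tag and mount path are assumptions, and the function name is hypothetical:

```shell
# Hypothetical renderer: single-replica nginx Deployment mounting PVC $1 at /data.
# ReadWriteOnce volumes attach to a single node, hence replicas: 1.
render_nginx_with_pvc() {
  local pvc="${1:-nginx-pvc}"
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: docker.io/library/nginx:mainline
          volumeMounts:
            - name: data
              mountPath: /data
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: ${pvc}
EOF
}
```

For example `render_nginx_with_pvc | kubectl apply -f -`, then `kubectl exec deploy/nginx -- sh -c 'date > /data/test.txt'` to write some data.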

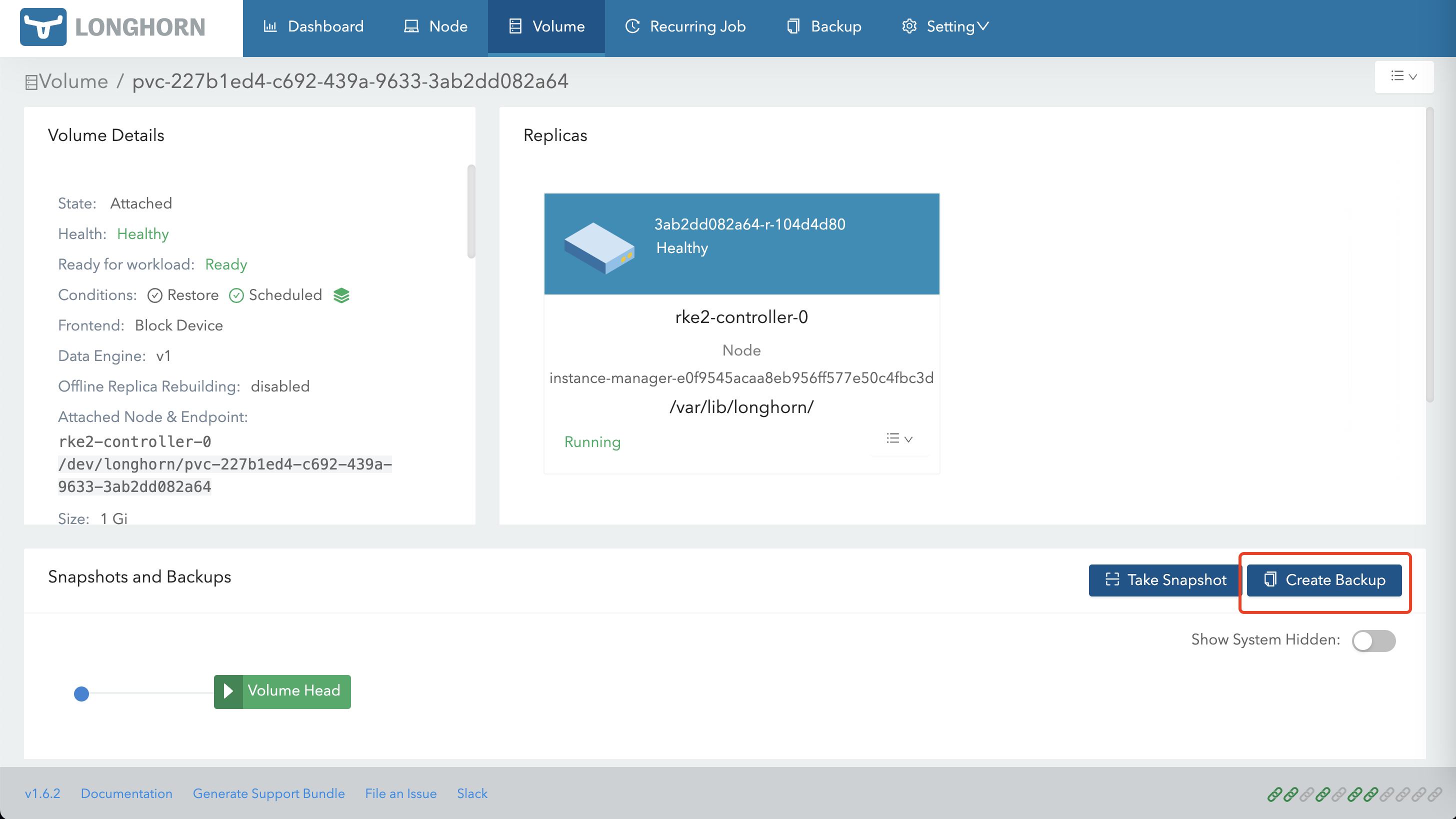

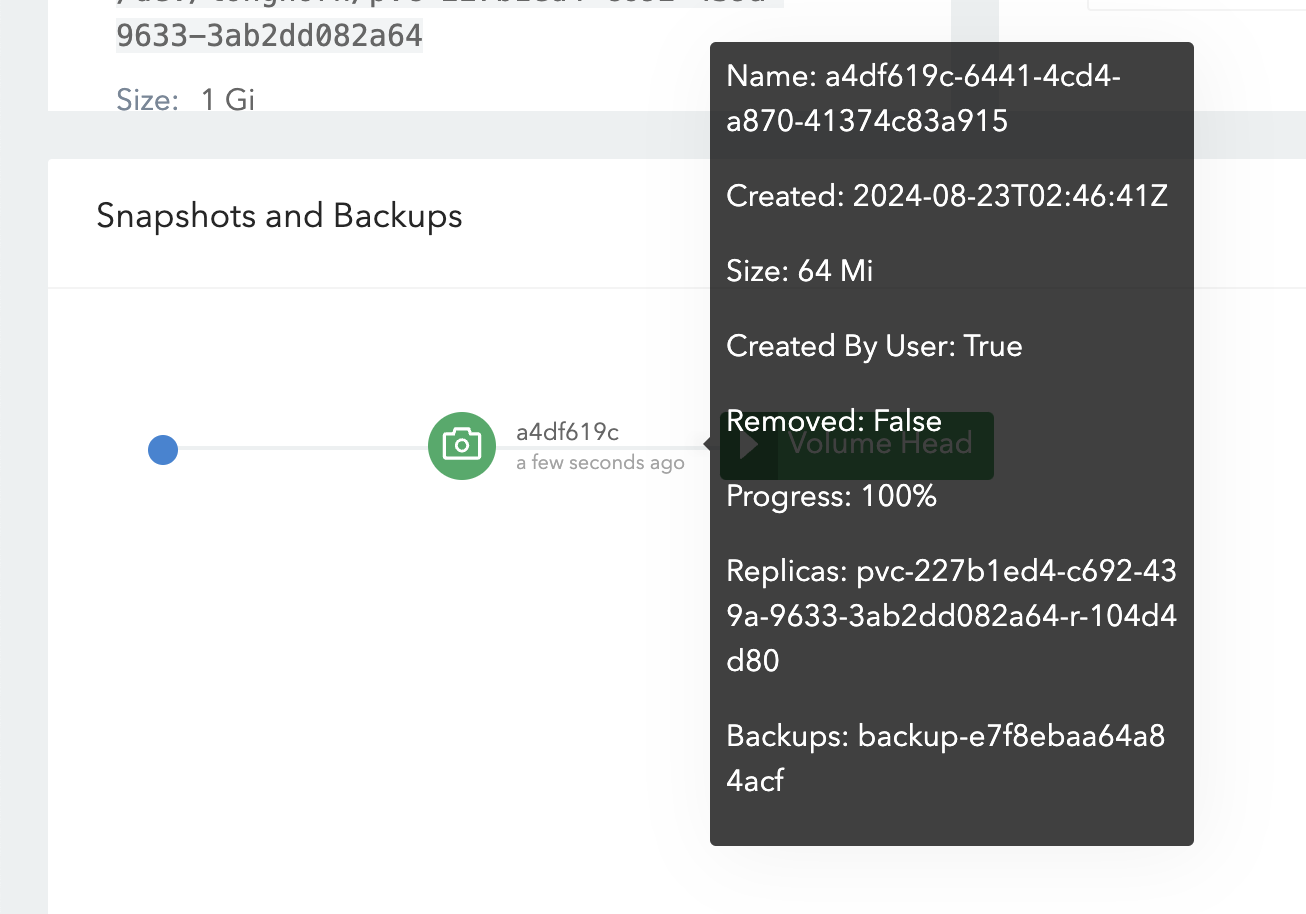

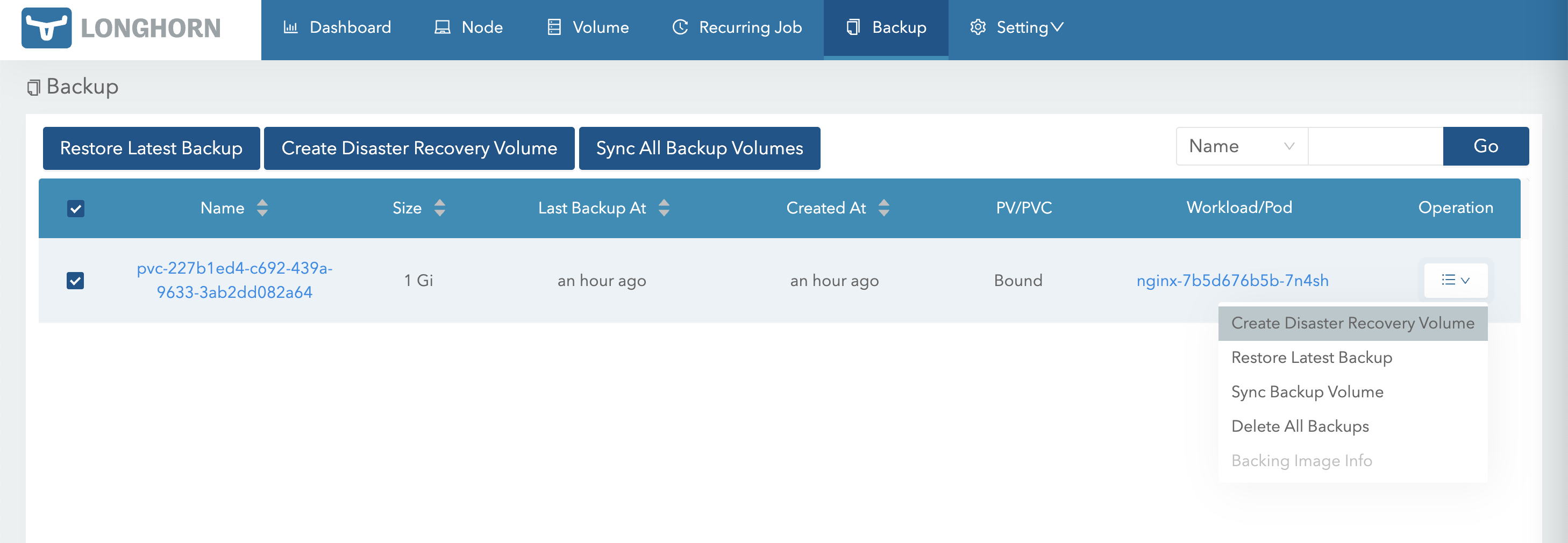

Create a backup in this cluster's LongHorn:

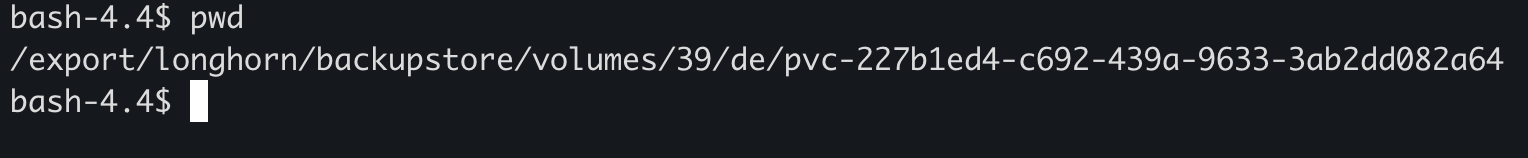

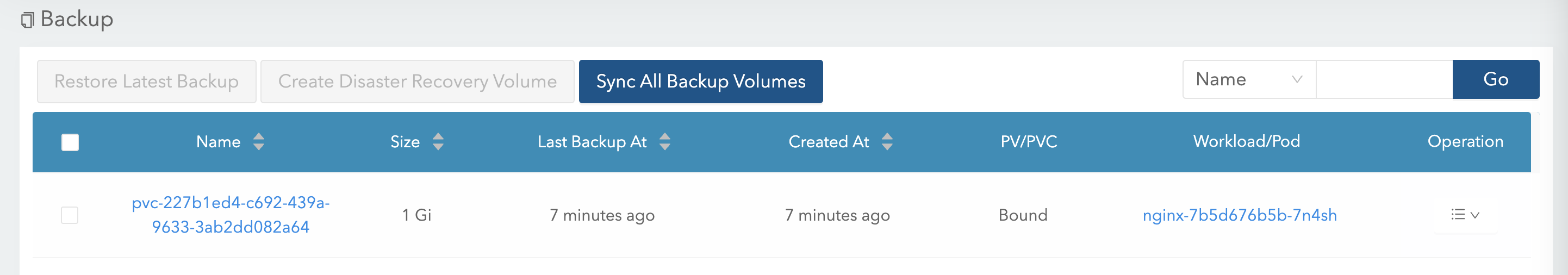

The backup is now visible in MinIO.

At this point LongHorn in both clusters can see the backup, because both use the same Backup Target.

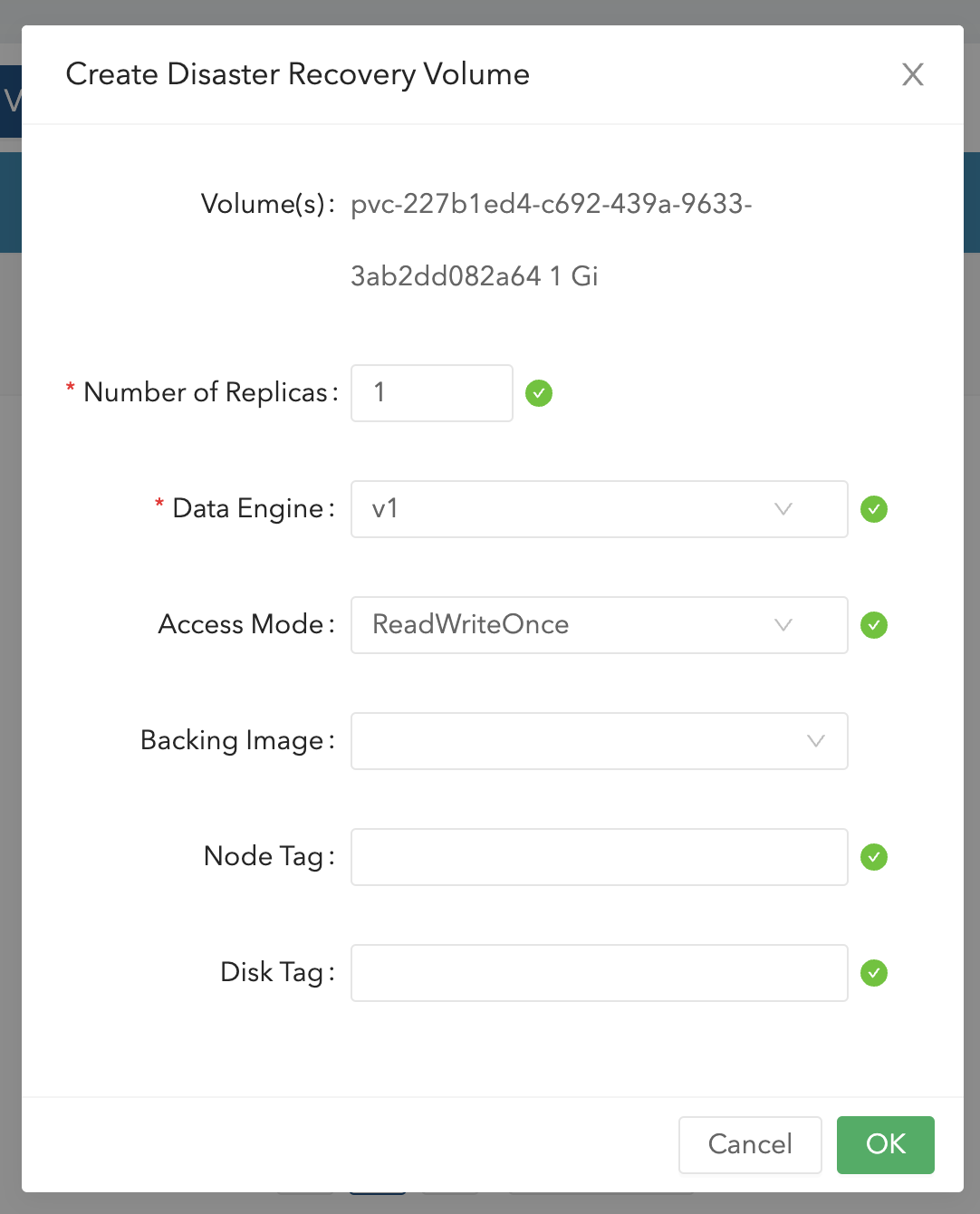

On the other cluster, create a volume from this backup:

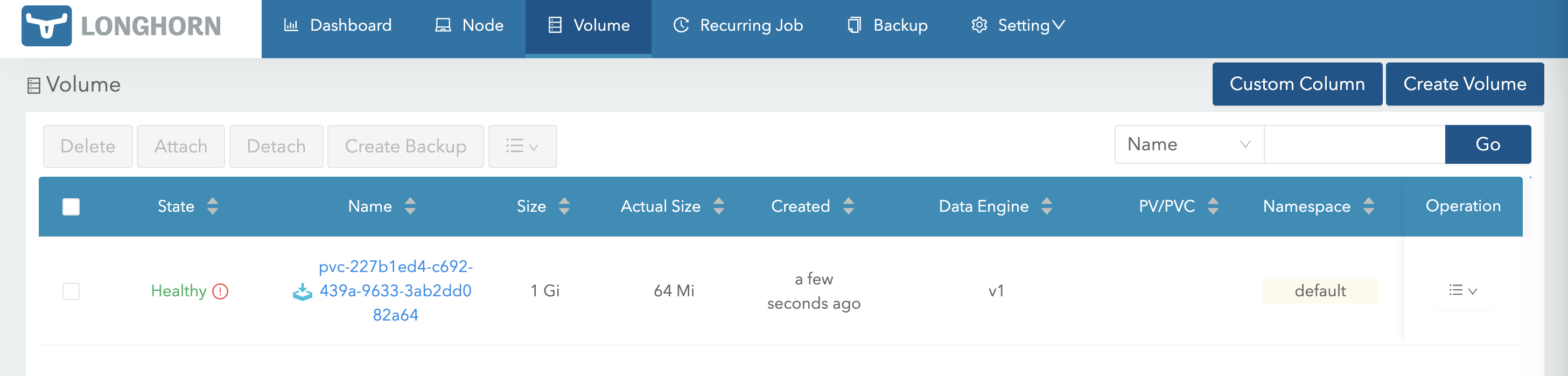

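Once created, the volume shows up. It is a disaster recovery (DR) volume: whenever a newer backup of the source volume appears in the Backup Target, it is restored into this volume incrementally. Data written to Nginx therefore reaches it only after that data has been backed up (for example by a recurring backup job).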

If one cluster goes down or the service becomes unavailable, this volume can be used for recovery.

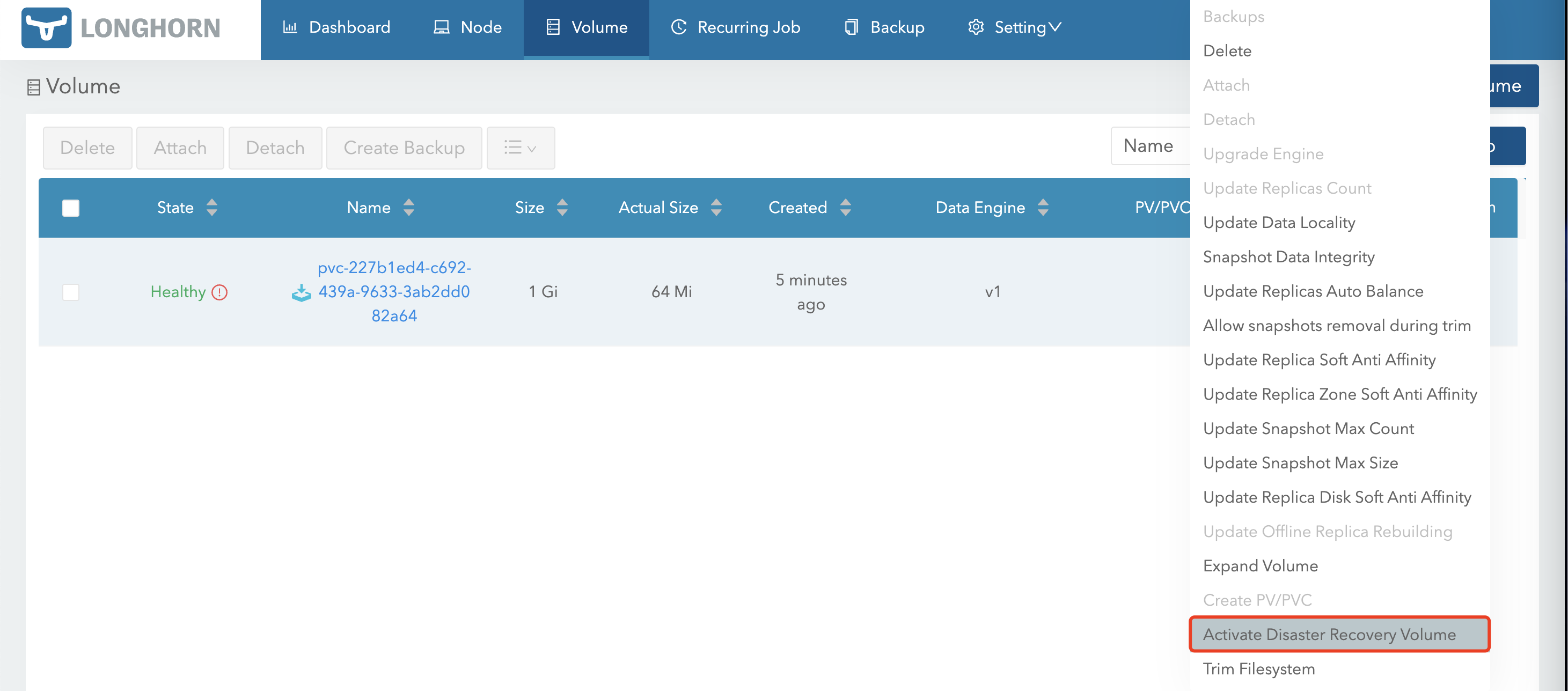

First, activate the volume:

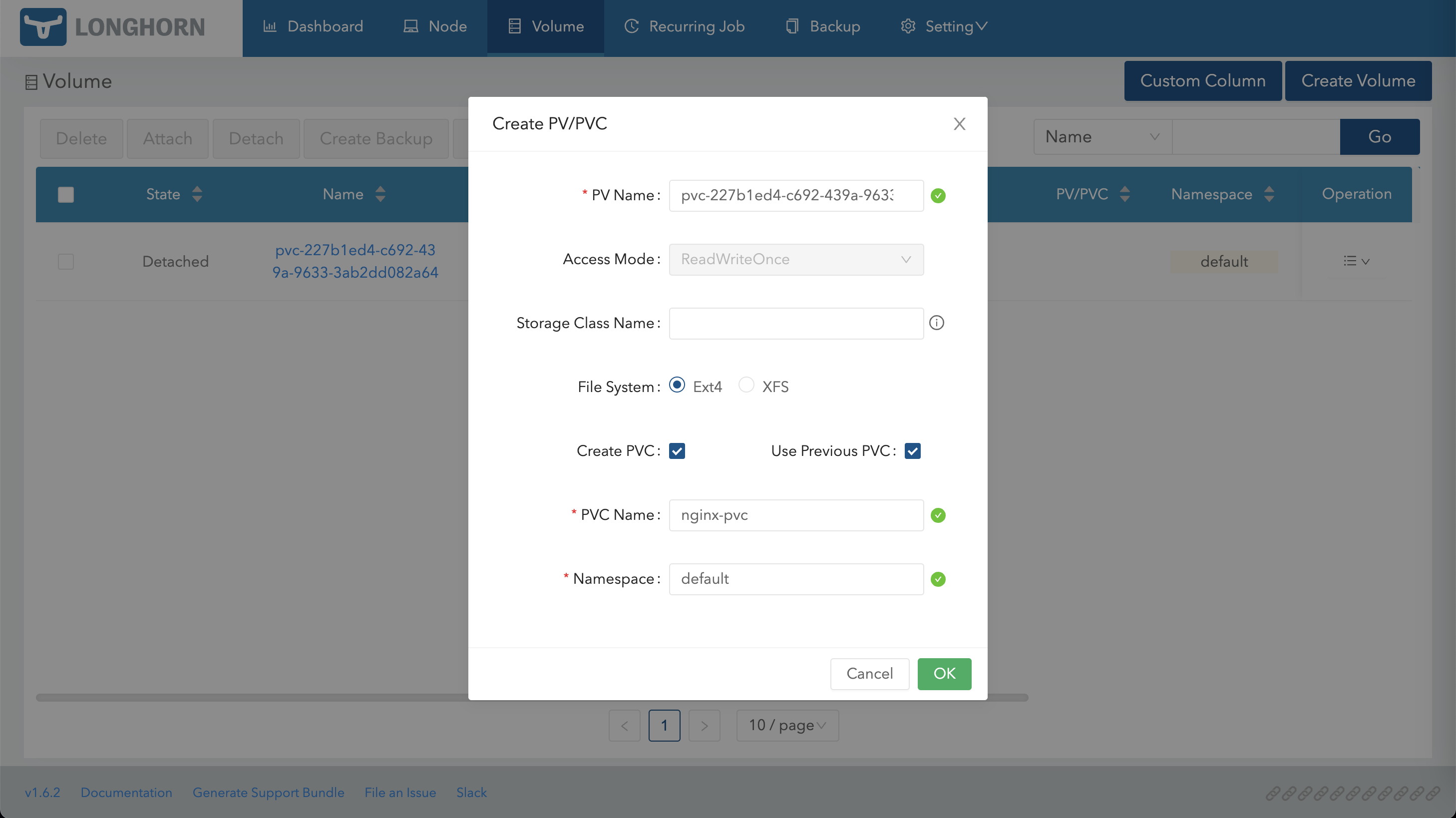

After activation, create a PV/PVC from this volume.

The PV/PVC then appear in the cluster; recreate Nginx with this PV/PVC and the original data is there.
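The UI's PV/PVC action can also be expressed as a statically provisioned pair that points at the existing LongHorn volume through the CSI driver `driver.longhorn.io`, which is how LongHorn's documentation describes using an existing volume. A sketch; the volume and PVC names, filesystem and the `longhorn-static` StorageClass name are assumptions to adjust, and the function name is hypothetical:

```shell
# Hypothetical renderer: PV/PVC pair bound to an existing, already activated LongHorn volume.
# $1 LongHorn volume name, $2 PVC name, $3 size (must not exceed the volume size)
render_static_pv_pvc() {
  local vol="$1" pvc="$2" size="${3:-1Gi}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${vol}
spec:
  capacity:
    storage: ${size}
  accessModes:
    - ReadWriteOnce
  # Retain so deleting the PVC does not delete the recovered volume
  persistentVolumeReclaimPolicy: Retain
  storageClassName: longhorn-static
  csi:
    driver: driver.longhorn.io
    fsType: ext4
    volumeHandle: ${vol}
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${pvc}
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: longhorn-static
  volumeName: ${vol}
  resources:
    requests:
      storage: ${size}
EOF
}
```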

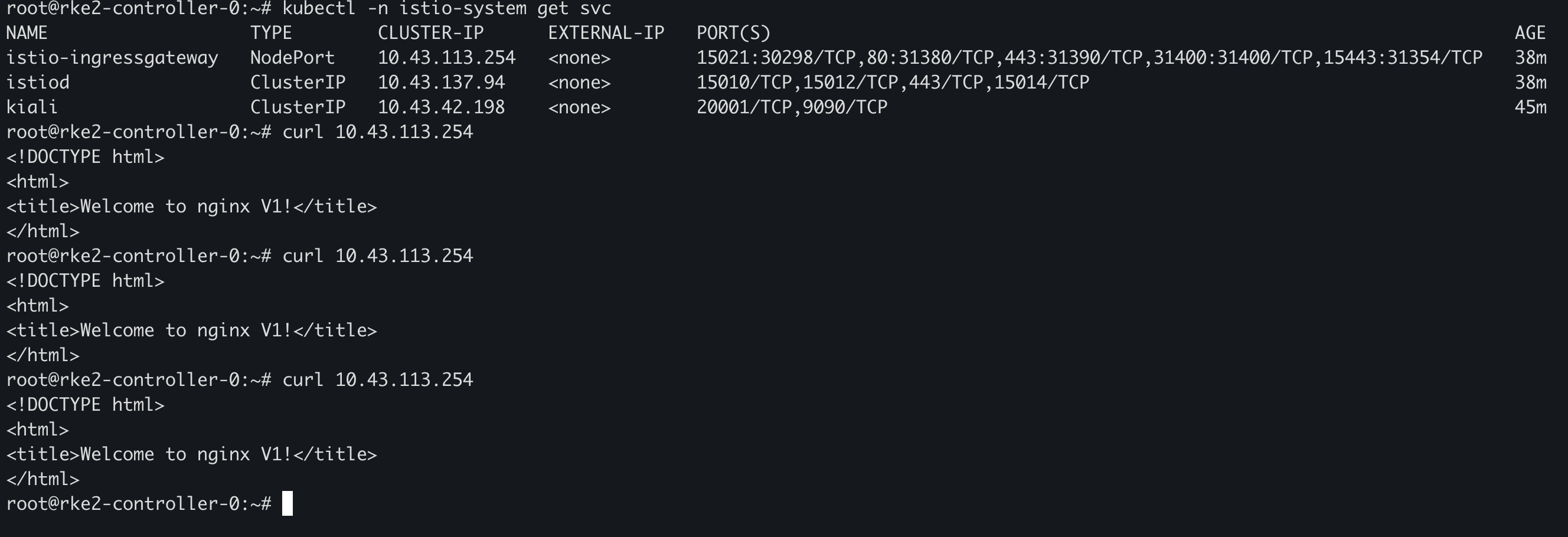

Istio

Istio can be installed directly from the UI.

Deploy two versions of Nginx:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: v1
data:
  index.html.v1: |
    <!DOCTYPE html>
    <html>
    <title>Welcome to nginx V1!</title>
    </html>
  index.html.v2: |
    <!DOCTYPE html>
    <html>
    <title>Welcome to nginx V2!</title>
    </html>
kind: ConfigMap
metadata:
  name: nginx-conf
  namespace: default
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
  namespace: default
spec:
  ports:
    - name: port-80
      port: 80
      protocol: TCP
      targetPort: 80
  selector:
    app: nginx
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
    version: v1
  name: nginx-v1
  namespace: default
spec:
  selector:
    matchLabels:
      app: nginx
      version: v1
  template:
    metadata:
      labels:
        app: nginx
        version: v1
        sidecar.istio.io/inject: 'true'
    spec:
      containers:
        - image: docker.io/library/nginx:mainline
          imagePullPolicy: IfNotPresent
          name: nginx-v1
          volumeMounts:
            - mountPath: /usr/share/nginx/html/index.html
              name: nginx-conf
              subPath: index.html.v1
      volumes:
        - configMap:
            defaultMode: 420
            name: nginx-conf
          name: nginx-conf
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
    version: v2
  name: nginx-v2
  namespace: default
spec:
  selector:
    matchLabels:
      app: nginx
      version: v2
  template:
    metadata:
      labels:
        app: nginx
        version: v2
        sidecar.istio.io/inject: 'true'
    spec:
      containers:
        - image: docker.io/library/nginx:mainline
          imagePullPolicy: IfNotPresent
          name: nginx-v2
          volumeMounts:
            - mountPath: /usr/share/nginx/html/index.html
              name: nginx-conf
              subPath: index.html.v2
      volumes:
        - configMap:
            defaultMode: 420
            name: nginx-conf
          name: nginx-conf
EOF
```

Create an Istio Gateway:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: nginx-gateway
spec:
  selector:
    istio: ingressgateway
  servers:
    - port:
        number: 80
        name: http
        protocol: HTTP
      hosts:
        - "*"
EOF
```

Create a DestinationRule:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: nginx
spec:
  host: nginx
  subsets:
    - name: v1
      labels:
        version: v1
    - name: v2
      labels:
        version: v2
EOF
```

Create a VirtualService that initially sends all traffic to Nginx V1:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: nginx
spec:
  hosts:
    - "*"
  gateways:
    - nginx-gateway
  http:
    - match:
        - uri:
            prefix: /
      route:
        - destination:
            host: nginx
            port:
              number: 80
            subset: v1
          weight: 100
EOF
```

When Nginx is accessed through the Istio Gateway, every response comes from V1.

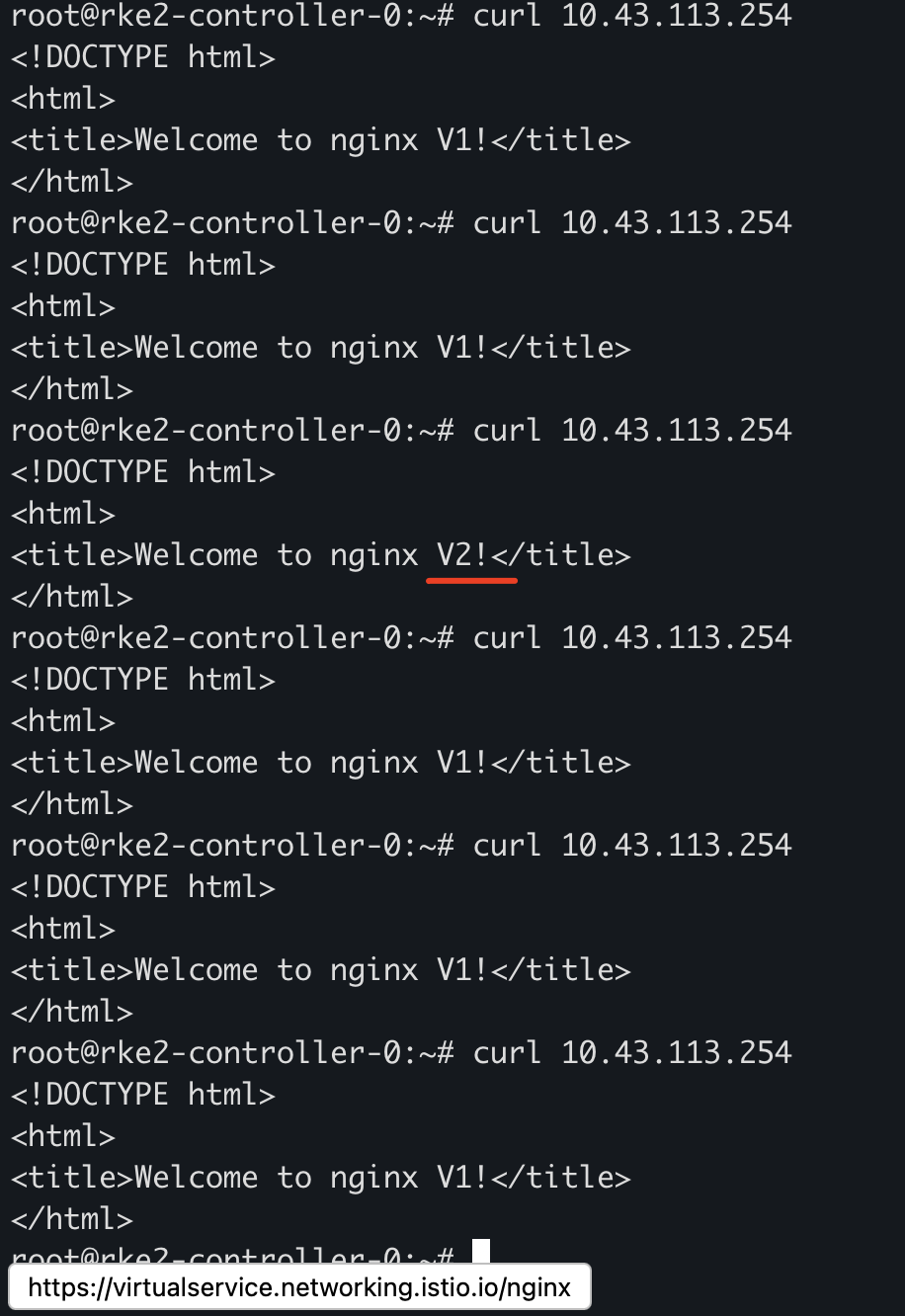

Modify the VirtualService to send 20% of the traffic to V2:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: nginx
spec:
  hosts:
    - "*"
  gateways:
    - nginx-gateway
  http:
    - match:
        - uri:
            prefix: /
      route:
        - destination:
            host: nginx
            port:
              number: 80
            subset: v1
          weight: 80
        - destination:
            host: nginx
            port:
              number: 80
            subset: v2
          weight: 20
EOF
```

Part of the traffic now goes to V2.

Circuit breaking is also configured with a DestinationRule:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: nginx-circuit-breaker
spec:
  host: nginx
  trafficPolicy:
    connectionPool:
      http:
        # Maximum number of HTTP/1.1 requests queued while waiting for a connection
        http1MaxPendingRequests: 1
        # Maximum number of requests per connection to the backend
        maxRequestsPerConnection: 1
      tcp:
        # Maximum number of TCP connections to the backend
        maxConnections: 1
EOF
```
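To see the breaker trip, requests have to pass through an Envoy proxy (a sidecar-injected client or the ingress gateway) and arrive concurrently; with `maxConnections: 1` and `http1MaxPendingRequests: 1`, some of them should come back as HTTP 503. A sketch that uses `xargs -P` for concurrency; the helper name is hypothetical. One hedged caveat: Istio only partially merges several DestinationRules for the same host, so if no 503s appear, try moving this `trafficPolicy` into the existing `nginx` DestinationRule:

```shell
# Hypothetical helper: fire $2 requests (default 50) at $1, $3 at a time (default 10),
# and print how many responses came back with each HTTP status code.
status_histogram() {
  local url="$1" total="${2:-50}" par="${3:-10}"
  seq "$total" | xargs -P "$par" -I{} \
    curl -s -o /dev/null -w '%{http_code}\n' "$url" | sort | uniq -c
}
```

For example `status_histogram "http://$GW/" 50 10`, with `GW` set to the gateway address as in the traffic-split check.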

K3s

Deploy K3s:

```shell
mkdir -pv /etc/rancher/k3s
cat > /etc/rancher/k3s/config.yaml <<EOF
token: 12345
system-default-registry: registry.cn-hangzhou.aliyuncs.com
EOF

curl -sfL https://rancher-mirror.rancher.cn/k3s/k3s-install.sh | INSTALL_K3S_MIRROR=cn sh -
```
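Further nodes can join this K3s server as agents with the same token. A sketch that writes an agent `config.yaml` (the `server` and `token` keys mirror the `--server`/`--token` flags); the helper name is hypothetical and `<SERVER_IP>` below is a placeholder:

```shell
# Hypothetical helper: write a K3s agent config that joins the server at $1 with token $2.
# $3 is the config directory (default /etc/rancher/k3s), overridable for testing.
write_k3s_agent_config() {
  local server="$1" token="$2" dir="${3:-/etc/rancher/k3s}"
  mkdir -p "$dir" || return 1
  cat > "$dir/config.yaml" <<EOF
server: https://${server}:6443
token: ${token}
EOF
}
```

On the new node: `write_k3s_agent_config <SERVER_IP> 12345`, then `curl -sfL https://rancher-mirror.rancher.cn/k3s/k3s-install.sh | INSTALL_K3S_MIRROR=cn INSTALL_K3S_EXEC=agent sh -`. Once it has joined, `k3s kubectl get nodes` on the server should list it.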